Scripting vPC hosts (ESXi / Hyper-V), switches, ports and policies creation on ACI with Ansible Playbooks

In this post we are going to set up Ansible to help us add hosts using vPC to the ACI fabric. One aspect of PC/vPC vs. a normal access port in ACI is that for PC/vPC a few components cannot be shared, whereas with an access port they can. There are quite a few steps to take to add a new PC/vPC connected host to the fabric. The host can be a hypervisor such as ESXi or Hyper-V, or just a bare metal server requiring PC/vPC connectivity into the fabric. In this post we create the script for vPC, although modifying it for PC is not difficult and only requires a few changes. The first step is to ensure we understand the object model and the intention of our script. We do this by creating a diagram of the model and how we expect it to work.

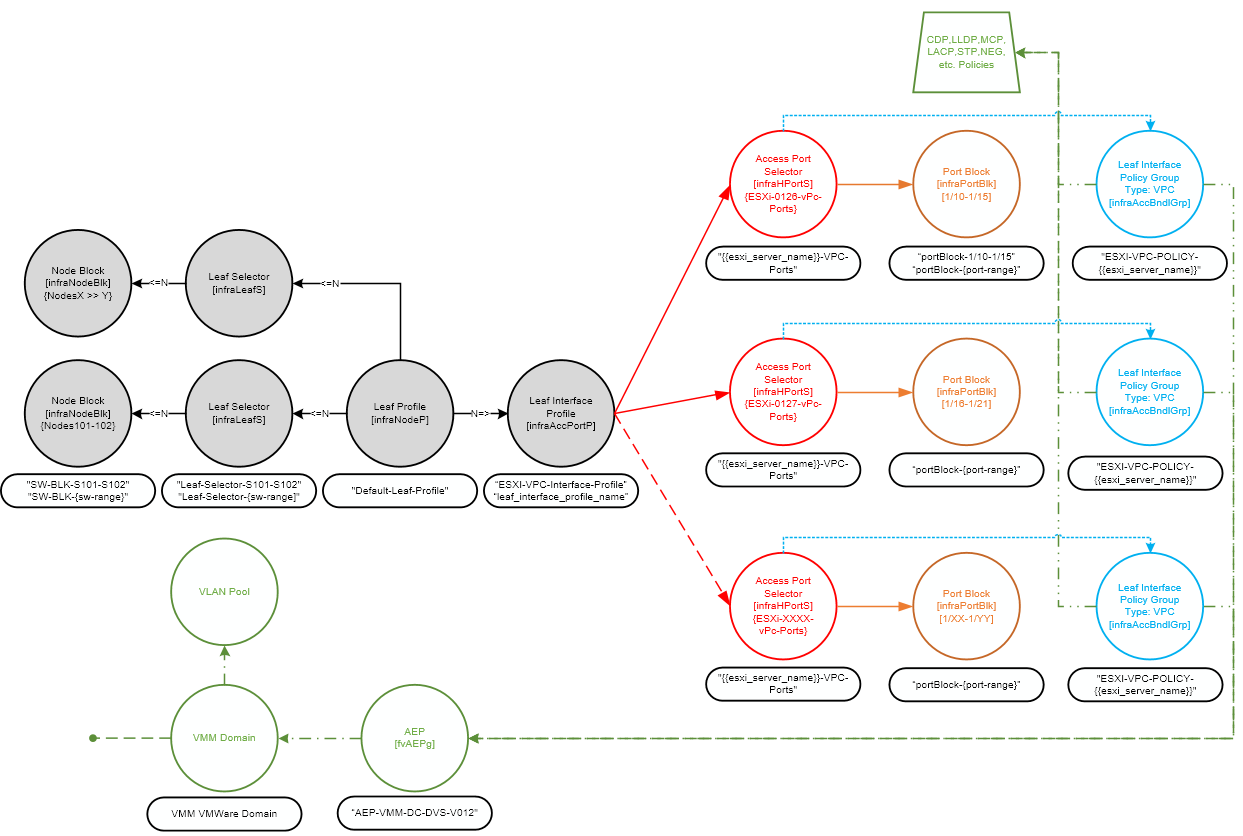

As part of any design, including this one for our script, we create a diagram which depicts the object model and how we expect it to fit together. It also shows where variables are applied to object names (enclosed in {{}} or {}) and which shared objects have static names. We also define what we expect from the script: after the Ansible playbook has run, the ACI fabric will have a number of ports configured on two switches in a vPC bundle, ready for the ESXi host to connect in a pre-determined, consistent configuration. Our goal is for the ACI fabric to make the ESXi host available to vCenter for hosting virtual machines and connectivity to EPGs.

Reviewing the diagram we created, we can identify the APIC managed objects (MOs) we need to create and roughly what the relationships are. In some cases there are inter-connecting MOs called relationship MOs. These are left out of the diagram for clarity, and we can look them up in the Cisco APIC MIM Reference when developing the scripts. For this design we will be creating all the objects shown in the diagram except for the MOs shown in green. The MOs in green are outside the scope of this script, which focuses on connecting hosts to the fabric (in this case the ESXi hosts). The AEP, VMM Domain and VLAN pool should already be present on the APIC. The AEP will be referenced in the script by the ‘Leaf Interface Policy Group’ MOs (one per host). The green trapezoid represents a group of individual policies for interface configuration such as CDP, LLDP, Link Negotiation and, importantly, LACP. These policies are reused for every ‘Leaf Interface Policy Group’, so we need to ensure they exist so that we can reference them by name in the script when building each ‘Leaf Interface Policy Group’. For this I have created an XML file to import, containing the policies referenced by the Ansible playbook. These policies are generally configured once at APIC install / initial configuration and don’t require change afterwards. It is a good idea to set them up at initial configuration to avoid overuse of ‘default’ policies, whose name is not descriptive and does not convey an intent.

All the scripts, modules and XML files for this post can be found on GitHub. The XML file can be easily imported into the APIC using Postman. If you need to set up Ansible, refer to the posts here.

The remaining objects need to be created by the playbook and scripts. The objects in gray need to be created only once as they will be shared by all the configured hosts. These objects select the switches to use; in this case ESXi hosts will always be connected to nodes 101 & 102. There are additional [infraNodeBlk] & [infraLeafS] objects shown on the diagram to show where we can add switches if required to scale the environment for more ESXi hosts. Recall that when we specify a port number in the ‘Access Port Selector’ ‘Port Block’ (e.g. E1/2) and bind this interface to switches (e.g. 101 & 102) via the ‘Leaf Interface Profile’, we are binding port E1/2 on both switches to the same policy. The interfaces cannot have separate policies unless we split the switches across different Leaf Selectors & Profiles, but for vPC we want the same port on both switches bound to the same policy for consistency.

We now know we need to create the following MOs on the APIC:

- infraNodeBlk (Node Block) [x1]

- infraLeafS (Leaf Selector) [x1]

- infraNodeP (Leaf Profile) [x1]

- infraAccPortP (Leaf Interface Profile) [x1]

- infraHPortS (Access Port Selector) [Per Host]

- infraPortBlk (Port Block) [Per Host]

- infraAccBndlGrp (Leaf Interface Policy Group) [Per Host]

To create these MOs we have a few choices for implementation, namely Ansible, APIC REST (XML/JSON) and Cobra. We will of course be using Ansible playbooks, but we will need additional support in the form of an Ansible module to fill the gaps in the existing modules for configuring the APIC from Ansible.

- Python & Cobra

- For Ansible modules, Cobra is a very good and flexible option for creating Python scripts whilst avoiding direct creation of XML. It does mean, however, that the Cobra library must be updated on the Ansible machine when updates are released for new versions of code. It also moves away from the typical Ansible connection model using fetch_url.

- Python & REST (XML or JSON)

- Cisco (and other vendors) provide a REST service permitting XML or JSON formats for configuration. The APIC is no exception. The Ansible playbook can utilize a Cisco module to send raw XML/JSON to the APIC to configure an object or objects. We have seven objects to create, each of which would be a task with parameters. This would make for a long file that is difficult to maintain.

- Ansible Modules

- Ansible is written in Python and uses scripts (modules) to provide functionality through playbook tasks. The modules are provided by Ansible, vendors and the community, and can be written by anyone. Cisco has provided some modules for individual object creation such as Tenants, Bridge Domains, etc., but there are no existing modules covering all the objects in the list above.

We want to keep the playbook easily manageable, with the script specifics in the playbook. If we can make the rest of the code generalized then it’s worth creating an Ansible module so it can be reused with playbook parameter changes only; for example, changing the script from ESXi to Hyper-V hosts, connecting external switches to the fabric, or anything else that requires vPC connectivity. So we will create an Ansible module, a playbook and an external configuration file. More on the external configuration file later; it helps external systems automate host creation, and lets users provide only the minimal required parameters in a small config file which the playbook uses to configure itself and therefore the module.

So we have the following chain:

JSON Configuration File ==> Ansible Playbook ==> Ansible Module ==> APIC

Creating the Ansible Module

Starting with the Ansible module, which does the work of creating the MOs / configuration on the APIC, we break this down into a few basic functions. Each of these functions requires specific information to create the configuration, so we need to understand what information must be passed to the module in order to configure the APIC. The following discusses the functions created in the module and what each function requires to complete its task.

- apicLogin

- This function logs in to the APIC and returns if the login fails. It means we need the APIC IP, username and password passed to the script. The other parameters we require are timeout (an integer) and the booleans use_proxy, use_ssl and validate_certs. These additional parameters dictate how the connection to the APIC is made. The validate_certs parameter controls checking of SSL certificates: if TRUE, the SSL certificate must be signed by a valid authority whose certificate is installed on the Ansible machine. The APIC generates a self-signed certificate on install, which will fail validation; setting validate_certs to FALSE prevents the script from failing due to the certificate being invalid. Be sure you know the risks before setting it to FALSE. We set it to FALSE in this script as we are running against an APIC that is using locally signed certificates.

- apicPost

- Sends the configuration request to the APIC. The APIC IP is required which we identified in the apicLogin function.

- createPolicyGroup

- This function creates the ‘Leaf Interface VPC Policy Group’ [infraAccBndlGrp] per host, which references the AEP and the individual policies as discussed. We require the name of the policy group to be created; in this case the name will be “ESXI-VPC-POLICY-” + host name (e.g. ESXI-0122). We also need the names of each policy required. This makes for a long list of parameters required by this function in the module:

- Required Parameters;

- Server Host Name

- #–Policies–

- infraRsLacpPol

- infraRsHIfPol

- infraRsCdpIfPol

- infraRsMcpIfPol

- infraRsLldpIfPol

- infraRsStpIfPol

- infraRsStormctrlIfPol

- infraRsL2IfPol

- infraRsL2PortSecurityPol

- infraRsQosDppIfPol

- infraRsQosEgressDppIfPol

- infraRsQosIngressDppIfPol

- infraRsMonIfInfraPol

- infraRsFcIfPol

- infraRsQosPfcIfPol

- infraRsQosSdIfPol

- #– AEP —

- infraRsAttEntP *** Note the script module assumes the AEP name with a prefix of “AEP-VMM-” and a suffix of the VMM domain.

- createInterfacePolicy

- This function creates the ‘Leaf Interface Profile’ [infraAccPortP], ‘Access Port Selectors’ [infraHPortS] and ‘Port Blocks’ [infraPortBlk]. The ‘Leaf Interface Profile’ is created once and shared by all hosts created by this function. The switch MOs reference this MO, and it also holds all the ‘Access Port Selectors’ and ‘Port Blocks’ created per host. In terms of parameters we require the following for each object:

- Leaf Interface Profile

- name

- description

- Access Port Selector

- name

- description

- type [ALL or range]

- reference [dn] to the Leaf Interface Policy Group created in the previous function

- Port Block

- Module/Port => Module/Port (range if specified above)


- createSwitchProfile

- This function creates the MOs related to selecting the switches to be used. These are the ‘Leaf Profile’ [infraNodeP], ‘Leaf Selector’ [infraLeafS] and ‘Node Block’ [infraNodeBlk]. These MOs only need to be created once, as they are reused for all hosts created with this script: for vPC, each configured host connects to the same port(s) on all configured switches. The script does resubmit this configuration to the APIC on every run. We could check whether the objects already exist, or simply rerun the code and let the APIC apply any updates. For the use case where this was deployed there should be no updates, so we rerun, but adding a check for existing objects before updating is possible. The parameters we require are:

- Leaf Profile

- name

- reference [dn] to the Leaf Interface Profile we created in the previous step

- Leaf Selector

- name

- type [ALL, range]

- Node Block

- switch node id => switch node id (range if specified above)
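
To make the per-host objects more concrete, here is a minimal sketch of the kind of JSON fragments that createPolicyGroup and createInterfacePolicy could build. It is not the module's actual code: the function names are hypothetical, the port block name "block1" is a placeholder, only three of the policy relations are shown, and the attribute names (lagT, tnLacpLagPolName, tnCdpIfPolName, tnLldpIfPolName, fromCard/toPort, tDn) reflect my understanding of the APIC object model and should be checked against the MIM Reference.

```python
def build_vpc_policy_group(name, aep_dn, lacp="default", cdp="default", lldp="default"):
    """Return an infraAccBndlGrp fragment for one host (illustrative subset of policies)."""
    return {
        "infraAccBndlGrp": {
            # lagT "node" marks the bundle as vPC; "link" would be a plain port channel.
            "attributes": {"name": name, "lagT": "node"},
            "children": [
                {"infraRsLacpPol": {"attributes": {"tnLacpLagPolName": lacp}}},
                {"infraRsCdpIfPol": {"attributes": {"tnCdpIfPolName": cdp}}},
                {"infraRsLldpIfPol": {"attributes": {"tnLldpIfPolName": lldp}}},
                {"infraRsAttEntP": {"attributes": {"tDn": aep_dn}}},
            ],
        }
    }


def build_port_selector(name, policy_group_name, from_card, from_port, to_card, to_port):
    """Return an infraHPortS fragment with one port block and a policy group reference."""
    return {
        "infraHPortS": {
            "attributes": {"name": name, "type": "range"},
            "children": [
                {"infraPortBlk": {"attributes": {
                    "name": "block1",  # placeholder block name (assumption)
                    "fromCard": str(from_card), "fromPort": str(from_port),
                    "toCard": str(to_card), "toPort": str(to_port)}}},
                # Relationship MO pointing at the per-host policy group by dn.
                {"infraRsAccBaseGrp": {"attributes": {
                    "tDn": "uni/infra/funcprof/accbundle-" + policy_group_name}}},
            ],
        }
    }


host = "ESXI-0122"
group_name = "ESXI-VPC-POLICY-" + host
group = build_vpc_policy_group(
    group_name, "uni/infra/attentp-AEP-VMM-DC-DVS-V012", lacp="LACP-MACPIN")
selector = build_port_selector(host + "-VPC-Ports", group_name, 1, 5, 1, 8)
```

Changing lagT from "node" to "link" is the kind of small change referred to earlier when adapting the script from vPC to PC.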


So we need the following parameters passed to the script. The following section is from the start of the Ansible module; it checks that the required parameters have been passed and, where they have not, sets defaults where possible. When we talk about parameters being passed to the script, these come from the playbook and are passed to the module by Ansible when it parses the playbook during execution.

hostname=dict(type='str', required=True, aliases=['host']),

username=dict(type='str', default='admin', aliases=['user']),

password=dict(type='str', required=True, no_log=True),

timeout=dict(type='int', default=30),

use_proxy=dict(type='bool', default=True),

use_ssl=dict(type='bool', default=True),

validate_certs=dict(type='bool', default=True),

# Config Params

interface_profile_name=dict(type='str', required=True),

interface_profile_description=dict(type='str', required=False),

access_port_selector_name=dict(type='str', required=True),

access_port_selector_description=dict(type='str', required=False),

access_port_selector_type=dict(choices=['ALL', 'range'], type='str', default='range'),

access_policy_group_name=dict(type='str', required=True),

access_policy_group_fexid=dict(type='int', default=101),

# ports

port_block_from_card=dict(type='int'),

port_block_from_port=dict(type='int'),

port_block_to_card=dict(type='int'),

port_block_to_port=dict(type='int'),

# switches / switch profile

switch_profile_name=dict(type='str', required=True),

switch_selector_name=dict(type='str', required=True),

switch_selector_type=dict(choices=['ALL', 'range'], type='str', default='range'),

switch_block_from=dict(type='str', required=True),

switch_block_to=dict(type='str', required=True),

# policy group

infraRsLacpPol=dict(type='str', default='default'),

infraRsHIfPol=dict(type='str', default='default'),

infraRsCdpIfPol=dict(type='str', default='default'),

infraRsMcpIfPol=dict(type='str', default='default'),

infraRsLldpIfPol=dict(type='str', default='default'),

infraRsStpIfPol=dict(type='str', default='default'),

infraRsStormctrlIfPol=dict(type='str', default='default'),

infraRsL2IfPol=dict(type='str', default='default'),

infraRsL2PortSecurityPol=dict(type='str', default='default'),

infraRsQosDppIfPol=dict(type='str', default='default'),

infraRsQosEgressDppIfPol=dict(type='str', default='default'),

infraRsQosIngressDppIfPol=dict(type='str', default='default'),

infraRsMonIfInfraPol=dict(type='str', default='default'),

infraRsFcIfPol=dict(type='str', default='default'),

infraRsQosPfcIfPol=dict(type='str', default='default'),

infraRsQosSdIfPol=dict(type='str', default='default'),

infraRsAttEntP=dict(type='str', default='default')

There is one more check made on the data passed to the module. As shown in the section below, if the “access_port_selector_type” parameter is set to “range” then the “port_block_***” variables must be passed too, and likewise the “switch_block_***” variables when “switch_selector_type” is “range”. If set to “ALL”, this translates to all ports or switches and does not require port or switch numbers.

mod = AnsibleModule(

argument_spec=argument_spec,

supports_check_mode=True,

required_if=[

['access_port_selector_type', 'range',

['port_block_from_card',

'port_block_from_port',

'port_block_to_card',

'port_block_to_port' ]],

['switch_selector_type', 'range',

['switch_block_from',

'switch_block_to']]

]

)
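
As a rough illustration of what the required_if rules above mean, the following plain-Python sketch reports which parameters would be considered missing for a given set of inputs. It is not Ansible's implementation, and the function name missing_required_if is made up for this example; it only mimics the "if key equals value then these names must be supplied" behaviour described above.

```python
def missing_required_if(params, rules):
    """Names that a required_if-style rule list would flag as missing from params."""
    missing = []
    for key, value, required_names in rules:
        # A rule only applies when the controlling parameter has the given value.
        if params.get(key) == value:
            missing += [n for n in required_names if params.get(n) is None]
    return missing


RULES = [
    ["access_port_selector_type", "range",
     ["port_block_from_card", "port_block_from_port",
      "port_block_to_card", "port_block_to_port"]],
    ["switch_selector_type", "range",
     ["switch_block_from", "switch_block_to"]],
]

# 'range' selected for ports but no port numbers supplied.
incomplete = {"access_port_selector_type": "range",
              "switch_selector_type": "ALL"}
print(missing_required_if(incomplete, RULES))

# 'ALL' for both selectors: no port or switch numbers are needed.
all_ports = {"access_port_selector_type": "ALL",
             "switch_selector_type": "ALL"}
print(missing_required_if(all_ports, RULES))
```

In the real module, AnsibleModule performs this validation itself and fails the task before any of the create functions run.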

Each of the create functions essentially builds the data in JSON format, which is then submitted to the APIC using apicPost. The POST URL is the same for all submissions as the JSON structure starts from polUni. For example, the module-generated JSON to create the configuration for switches 101 & 102, connected to a ‘Leaf Interface Profile’ called “ESXI-VPC-Interface-Profile”, is:

{

"polUni": {

"attributes": {},

"children": [

{

"infraInfra": {

"attributes": {},

"children": [

{

"infraNodeP": {

"attributes": {

"descr": "",

"name": "Default-Leaf-Profile"

},

"children": [

{

"infraLeafS": {

"attributes": {

"descr": "",

"name": "Leaf-Selector-S101-S102",

"type": "range"

},

"children": [

{

"infraNodeBlk": {

"attributes": {

"descr": "",

"from_": "101",

"name": "SW-BLK-S101-S102",

"to_": "102"

}

}

}

]

}

},

{

"infraRsAccPortP": {

"attributes": {

"tDn": "uni/infra/accportprof-ESXI-VPC-Interface-Profile"

}

}

}

]

}

}

]

}

}

]

}

}
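
A structure like the one above is straightforward to assemble from nested Python dictionaries. The sketch below shows one way a function such as createSwitchProfile might do it; the function name build_switch_profile and its argument names are illustrative assumptions, not the module's actual code, and only the MO classes and attributes visible in the JSON above are used.

```python
import json


def build_switch_profile(profile, selector, block, node_from, node_to, interface_profile):
    """Build the polUni-rooted dict for a Leaf Profile, Leaf Selector and Node Block."""
    node_blk = {"infraNodeBlk": {"attributes": {
        "descr": "", "from_": str(node_from), "name": block, "to_": str(node_to)}}}
    leaf_sel = {"infraLeafS": {
        "attributes": {"descr": "", "name": selector, "type": "range"},
        "children": [node_blk]}}
    # Relationship MO binding the Leaf Profile to the shared Leaf Interface Profile.
    iface_ref = {"infraRsAccPortP": {"attributes": {
        "tDn": "uni/infra/accportprof-" + interface_profile}}}
    node_p = {"infraNodeP": {
        "attributes": {"descr": "", "name": profile},
        "children": [leaf_sel, iface_ref]}}
    return {"polUni": {"attributes": {}, "children": [
        {"infraInfra": {"attributes": {}, "children": [node_p]}}]}}


payload = build_switch_profile(
    "Default-Leaf-Profile", "Leaf-Selector-S101-S102", "SW-BLK-S101-S102",
    101, 102, "ESXI-VPC-Interface-Profile")
print(json.dumps(payload, indent=2, sort_keys=True))
```

Building the payload as a dictionary and serializing it at the end avoids hand-editing JSON strings, and the same function can be reused if another switch pair is added later.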

There are some specific requirements for Ansible modules which are documented here. The module can be stored in the same directory as the playbook or in the Ansible directory under Python’s directory structure. On Ubuntu this directory is located at “/usr/lib/python2.7/dist-packages/ansible/modules”. Either drop your module into one of the existing folders or create your own folder and drop the module in there. This folder structure and all folders and files below it are included in the module search when a playbook is run. Our file is saved in a directory called “/usr/lib/python2.7/dist-packages/ansible/modules/custom/” with a file name of “aci_vpc_policy_sw_int_setup.py”. With our Ansible module created we now need to create the playbook.

Creating the Ansible Playbook

The Ansible playbook uses the YAML format for consistency across all playbooks, so whether you are using a module written for the Cisco APIC, VMware vCenter, Microsoft Hyper-V, F5, etc. the format will always be the same, although the parameters required will of course be different. We already know the parameters for our module. All modules should follow the documentation standard, so if you need to know what the parameters are and you know where the modules are stored, you can open the module file and take a look at the documentation section. Modules included with the Ansible build are additionally documented here.

The playbook can be placed in any directory. Ansible has a page discussing the recommended directory structure, certainly worthwhile for more complicated setups with roles etc.; you can read the documentation on this here. In this case, as we have a simple playbook, we will use the default directory as stated in the documentation, “/etc/ansible/playbooks/”, and call our file “create-esxi-vpc-host.yml”.

In this file we will specify the APIC, the module we just created, and all the parameters the module needs to execute its task of creating the configuration we require. The full playbook follows.

# Playbook to create ESXi Hosts

#

# ansible-playbook create-esxi-vpc-host.yml --extra-vars "@create-esxi-vpc-host-params.json"

#

# Requirements:

# VMM Domain & DVS Created

# VMware Data Center created on vCenter matching above

# VMware DC Cluster created on vCenter

#

#

- name: ESXi Host VPC Interface Setup

hosts: apic

connection: local

gather_facts: no

vars:

# comment out as external JSON in-use

#esxi_server_name : "ESXI-0102"

#vcenter_dvs : "DC-DVS-V012"

#leaf_interface_profile_name : "ESXI-VPC-Interface-Profile"

#port_from_card : 1

#port_from_port : yy

#port_to_card : 1

#port_to_port : yy

#leaf_switch_from : xxx

#leaf_switch_to : xxx

tasks:

- name: Leaf Profile Setup (ESXi VPC Host Specific)

aci_vpc_policy_sw_int_setup:

validate_certs: no

hostname: "{{ inventory_hostname }}"

username: "{{ aci_username }}"

password: "{{ aci_password }}"

# Create Specific ESXi Host VPC Policy Group

#-- Port Policy --

# These individual policies [infraRs*Pol] must exist in the APIC

# Replace policy names where required with other existing policy. i.e. If LACP MAC Pinning is Not Required.

infraRsLacpPol : "LACP-MACPIN"

infraRsHIfPol : "LINK-POLICY-NEG-OFF"

infraRsCdpIfPol : "CDP-OFF"

infraRsMcpIfPol : "MCP-ON"

infraRsLldpIfPol : "LLDP-ON"

infraRsStpIfPol : "STP-BPDU-GUARD-FILTER-ON"

infraRsStormctrlIfPol : "STORMCONTROL-ALL-TYPES"

infraRsL2IfPol : "L2-VLAN-SCOPE-GLOBAL"

infraRsL2PortSecurityPol : "PORT-SECURITY-DISABLED"

#-- Data Plane Policy --

infraRsQosDppIfPol : "default"

infraRsQosEgressDppIfPol : "default"

infraRsQosIngressDppIfPol : "default"

#-- Monitoring Policy --

infraRsMonIfInfraPol : "default"

#-- Fibre Channel - FCOE --

infraRsFcIfPol : "default"

infraRsQosPfcIfPol : "default"

infraRsQosSdIfPol : "default"

#-- AEP --

infraRsAttEntP : "uni/infra/attentp-AEP-VMM-{{vcenter_dvs}}"

# Create\Access Generic 'Leaf Interface Profile' [infraAccPortP] For VMware ESXi Hosts

# where same switches are used for selected ports for these ESXi hosts

interface_profile_name : "{{leaf_interface_profile_name}}"

interface_profile_description : "Generic Interface Policy For VMware ESXi Hosts where same switches are used for selected ports for these ESXi hosts"

# Create Specific ESXi Host (Interface) 'Access Port Selector'[infraHPortS] Policy

access_port_selector_name : "{{esxi_server_name}}-VPC-Ports"

access_port_selector_description : "{{esxi_server_name}} VPC Ports"

access_port_selector_type : "range"

# Select The Interfaces That This ESXi Host Will Connect To [infraPortBlk]

port_block_from_card : "{{port_from_card}}"

port_block_from_port : "{{port_from_port}}"

port_block_to_card : "{{port_to_card}}"

port_block_to_port : "{{port_to_port}}"

# Select The Specific ESXi Host 'VPC Interface Policy Group' [infraAccBndlGrp]

access_policy_group_name: "ESXI-VPC-POLICY-{{esxi_server_name}}"

access_policy_group_fexid: 101

# [infraNodeP]

switch_profile_name : "Default-Leaf-Profile"

# [infraLeafS]

switch_selector_name : "Leaf-Selector-S{{leaf_switch_from}}-S{{leaf_switch_to}}"

switch_selector_type : "range"

# [infraNodeBlk]

switch_block_from : "{{leaf_switch_from}}"

switch_block_to : "{{leaf_switch_to}}"

delegate_to: localhost

# uncomment next two lines for debugging

#register: retVal

#- debug: var=retVal

First we have the play section naming the play. The hosts line refers to the [apic] section in the Ansible hosts file, which contains our APIC IP, together with the [apic:vars] section holding the username and password to make things easy for this example.

There is only one task, named ‘Leaf Profile Setup (ESXi VPC Host Specific)’. The next line specifies the module file name (without the .py extension) we created, and a value is assigned to each parameter the module requires. In some cases variables are used in the {{…}} enclosures. These are passed into the playbook from a JSON formatted file on the CLI when the playbook is run. Alternatively we can uncomment the ‘vars’ section and amend the values as appropriate, which means we won’t need to use the external JSON file.

In this design we are using an external JSON file to pass in the variables that change per host. It’s a smaller, less detailed file and can be created by an external system (web page etc.) without exposing the full playbook outside of the Ansible system. The JSON file looks like this.

{

"_comment_1_" : "these k:v's are usually static for particular host types per designated switch pair/range",

"vcenter_dvs" : "DC-DVS-V012",

"leaf_interface_profile_name" : "ESXI-VPC-Interface-Profile",

"leaf_switch_from" : 101,

"leaf_switch_to" : 102,

"_comment_2_" : "next k:v's are unique per host",

"esxi_server_name" :"ESXI-0130",

"port_from_card" : 1,

"port_from_port" : 5,

"port_to_card" : 1,

"port_to_port" : 8

}

The JSON file just has the details that would change per host. The first set of variables could be moved to the playbook to keep the file focused on the host only, but in this case the vCenter domain and switches needed to change depending on the host being created.
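
Because this file may be produced by an external system, it can be useful to sanity check it and preview the object names the playbook will render before running anything against the APIC. The following is a small sketch of such a pre-flight step. It is not part of the original scripts; derive_names and check_ranges are hypothetical helpers, and the name templates are simply copied from the playbook task shown earlier.

```python
import json

PARAMS = json.loads("""
{
  "vcenter_dvs": "DC-DVS-V012",
  "leaf_interface_profile_name": "ESXI-VPC-Interface-Profile",
  "leaf_switch_from": 101,
  "leaf_switch_to": 102,
  "esxi_server_name": "ESXI-0130",
  "port_from_card": 1,
  "port_from_port": 5,
  "port_to_card": 1,
  "port_to_port": 8
}
""")


def derive_names(v):
    """Mirror the name templates used in the playbook task."""
    host = v["esxi_server_name"]
    return {
        "access_port_selector_name": "{}-VPC-Ports".format(host),
        "access_policy_group_name": "ESXI-VPC-POLICY-{}".format(host),
        "switch_selector_name": "Leaf-Selector-S{}-S{}".format(
            v["leaf_switch_from"], v["leaf_switch_to"]),
        "infraRsAttEntP": "uni/infra/attentp-AEP-VMM-{}".format(v["vcenter_dvs"]),
    }


def check_ranges(v):
    """Return a list of problems; 'from' values must not exceed 'to' values."""
    problems = []
    if v["leaf_switch_from"] > v["leaf_switch_to"]:
        problems.append("leaf_switch_from is greater than leaf_switch_to")
    start = (v["port_from_card"], v["port_from_port"])
    end = (v["port_to_card"], v["port_to_port"])
    if start > end:
        problems.append("port range is reversed")
    return problems


names = derive_names(PARAMS)
problems = check_ranges(PARAMS)
for key in sorted(names):
    print(key, "=", names[key])
print("problems:", problems)
```

A check like this could sit in the external system that generates the file, so that obviously reversed ranges never reach the playbook.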

Running the Playbook

We can now run the Ansible playbook. We have a few ways of running the playbook and passing the required variable data into the execution environment. We will look at a few of these ways from the CLI.

Using the external JSON file

JSON File

{

"_comment_1_" : "these k:v's are usually static for particular host types per designated switch pair",

"vcenter_dvs" : "DC-DVS-V012",

"leaf_interface_profile_name" : "ESXI-VPC-Interface-Profile",

"leaf_switch_from" : 101,

"leaf_switch_to" : 102,

"_comment_2_" : "next k:v's are unique per host",

"esxi_server_name" :"ESXI-0130",

"port_from_card" : 1,

"port_from_port" : 5,

"port_to_card" : 1,

"port_to_port" : 8

}

CLI

ansible-playbook create-esxi-vpc-host.yml --extra-vars "@create-esxi-vpc-host-params.json"

Using the Playbook ‘vars’ section

We need to remove the ‘#’ comment character from the variables in the playbook ‘vars’ section and ensure the variable values are correct for the deployment.

Playbook

vars:

# comment out as external JSON in-use

esxi_server_name : "ESXI-0103"

vcenter_dvs : "DC-DVS-V012"

leaf_interface_profile_name : "ESXI-VPC-Interface-Profile"

port_from_card : 1

port_from_port : 14

port_to_card : 1

port_to_port : 19

leaf_switch_from : 101

leaf_switch_to : 102

CLI

ansible-playbook create-esxi-vpc-host.yml

Using the CLI and playbook ‘vars’ section

We remove the ‘#’ comments in the playbook ‘vars’ section for the variables we wish to set in the playbook. The variables we want to set on the command line we leave commented and pass their values at the command line. You do not need to leave these commented in the playbook, but if you then forget to enter them at the command line the values from the playbook will be used, possibly causing configuration issues if those values conflict with previous configurations. The example below shows the ‘vars’ uncommented except for the port ranges, which are provided on the CLI with the playbook command shown.

Playbook

vars:

# comment out as external JSON in-use

esxi_server_name : "ESXI-0102"

vcenter_dvs : "DC-DVS-V012"

leaf_interface_profile_name : "ESXI-VPC-Interface-Profile"

#port_from_card : 1

#port_from_port : yy

#port_to_card : 1

#port_to_port : yy

leaf_switch_from : 101

leaf_switch_to : 102

CLI

ansible-playbook create-esxi-vpc-host.yml --extra-vars "port_from_card=1 port_from_port=20 port_to_card=1 port_to_port=23"

APIC Configuration Validation (GUI)

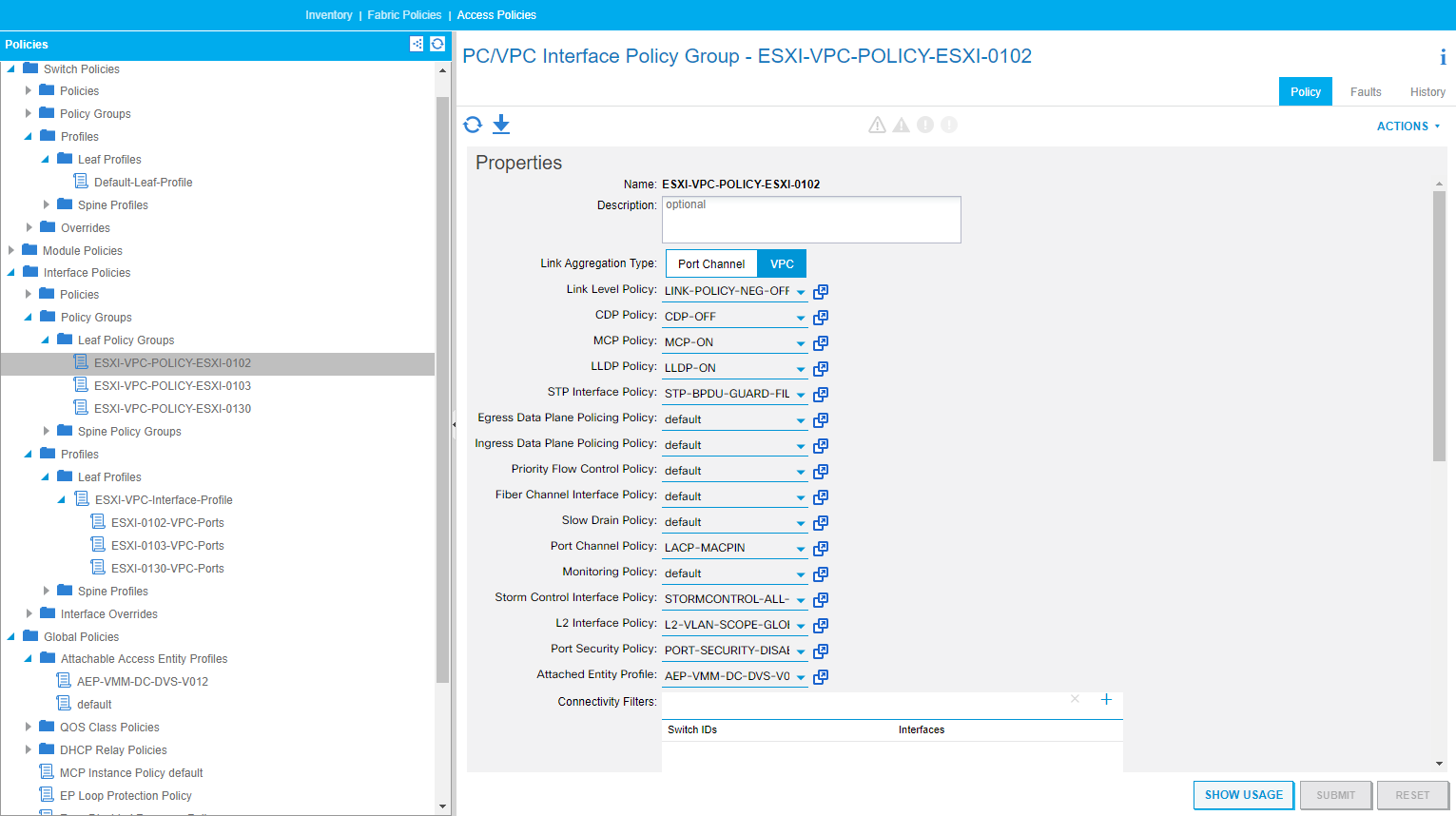

Interface Policy Group

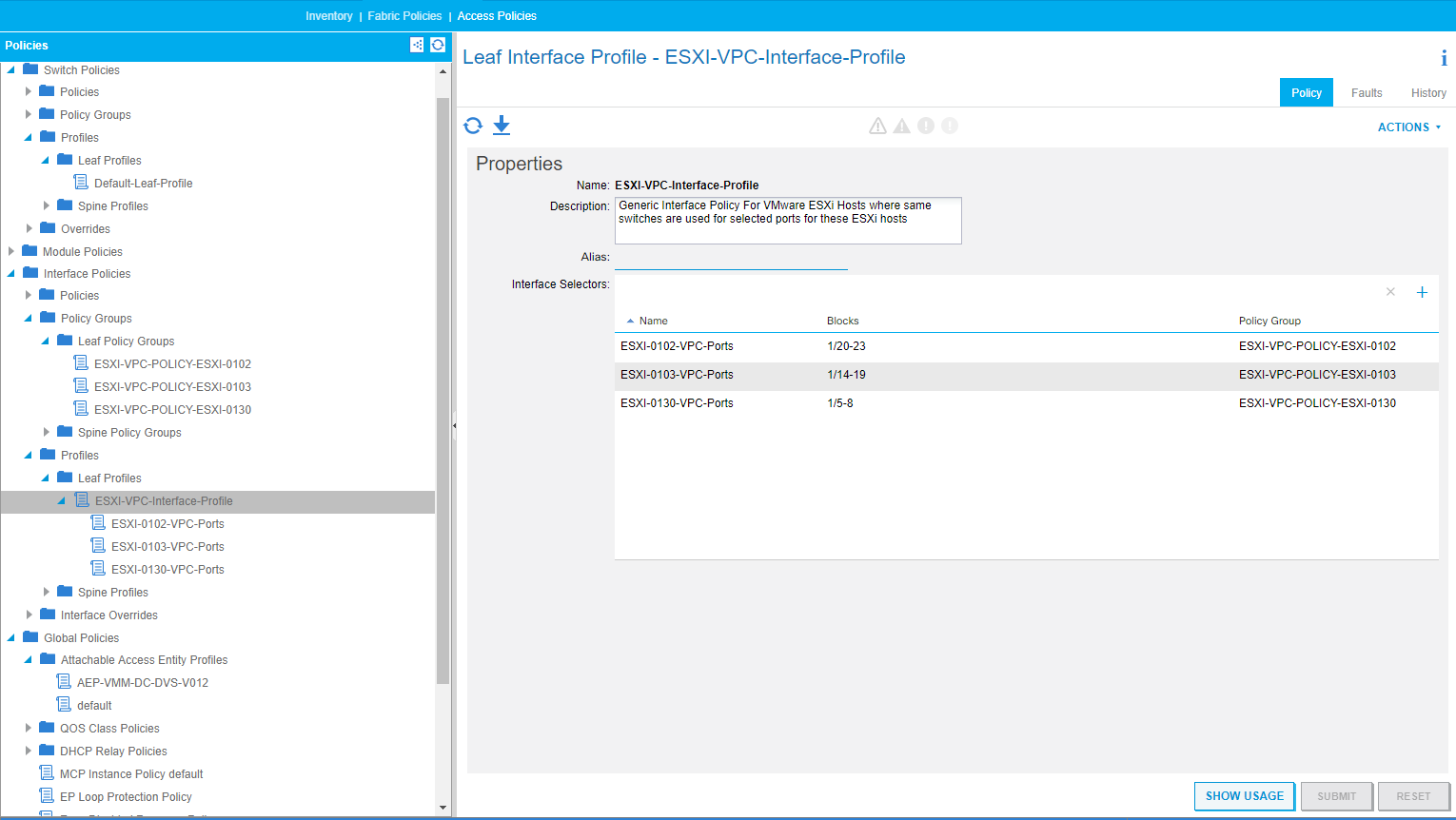

Leaf Interface Profile

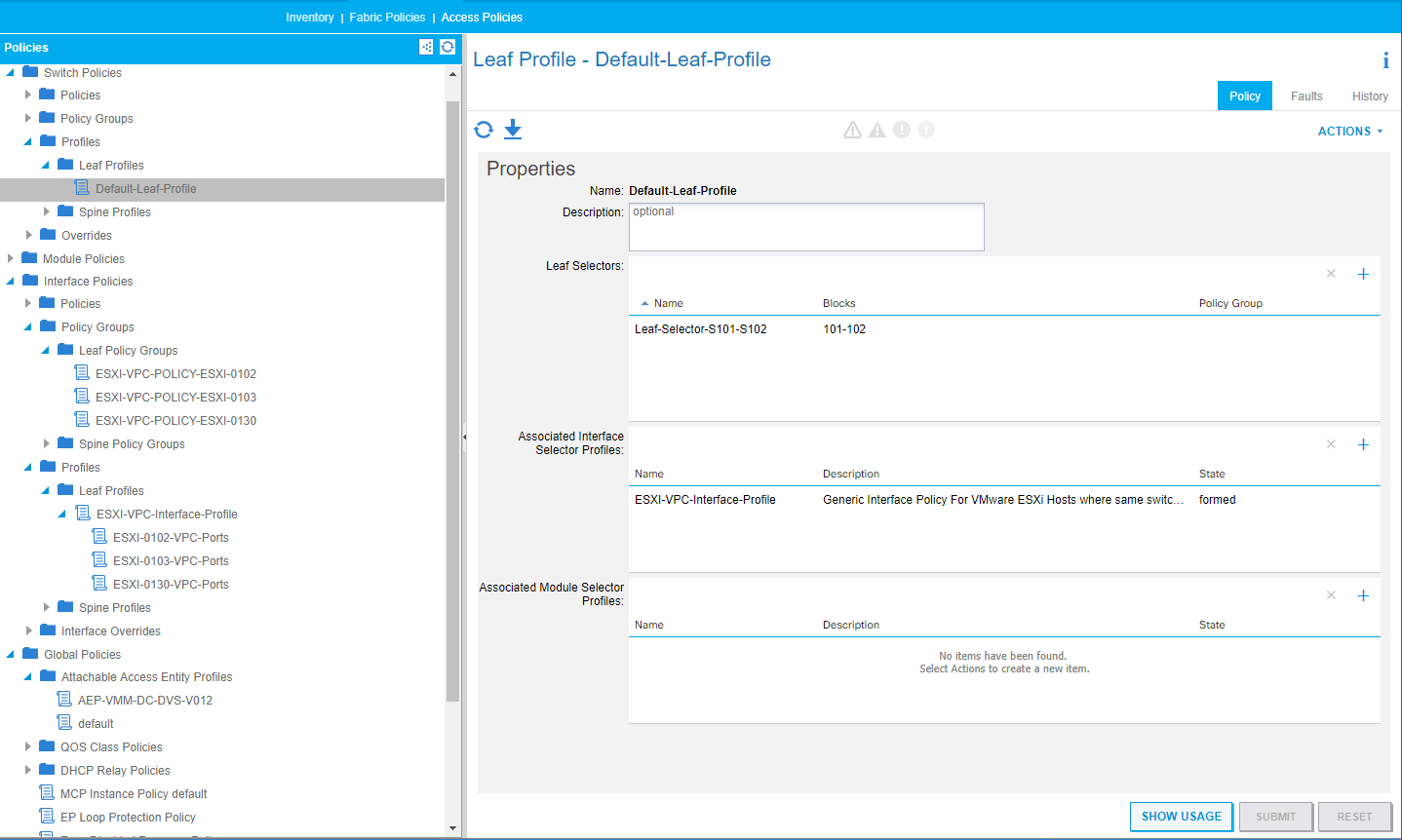

Leaf Profile

We have used three different methods to run the Ansible playbook, with the successful results shown in the above screenshots from the APIC GUI. This code is used as a baseline for more complex scripts for customers’ requirements; e.g. it could be extended to add the new hosts to vCenter, providing a single script that configures an ESXi host on both vCenter & ACI.