ACI & Kubernetes – The Calico K8s CNI & MetalLB (Part One)

In contrast to the Cisco ACI CNI (read about this here), the Calico CNI does not integrate with ACI. This is not a negative: Calico and MetalLB have a lot to offer, and emulating some of the good parts of the Cisco CNI is not too difficult. On this page we discuss the use of ACI, Calico and MetalLB, with a follow-up here looking at the benefits and how we could improve integration with a bit of code.

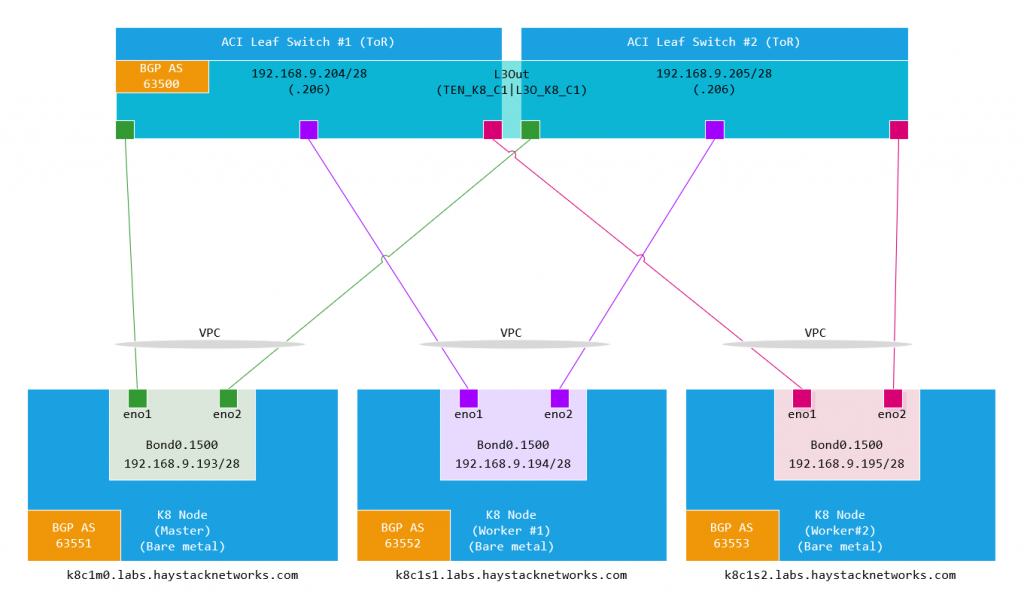

In this build we have three bare-metal hosts (HP DL360s) connected redundantly to a pair of ACI fabric leaf switches. The hosts are running CentOS 7 and we are running ACI 5.2(2f). We will go through the build and configuration with a custom application and the K8s dashboard application.

Design

First we need to settle a few things, such as the VLAN IDs and IP addressing for the various networks we need. We have the following:

| Network | Description/Notes | VLAN ID | IP Network |
| --- | --- | --- | --- |
| K8 Node Subnet / ACI Leaf SVI | The K8 node interfaces and ACI leaf switch interfaces network | 1500 | 192.168.9.192/28 |
| Docker Default Bridge IP Range | Docker Bridge Internal Container IP Range | – | 172.17.0.0/16 |
| a. K8 Pod IP Range; b. Calico Default IPv4 Pool | a. K8 Pod IP Assignment Range; b. CALICO_IPV4POOL_CIDR | – | 172.27.0.0/16 |
| K8 Cluster IP Range | K8 Internal Cluster IP for Application Services | – | 172.28.0.0/16 |
| K8 / MetalLB Load Balancer IP Range | External IP Range for App Service of ‘type: LoadBalancer’ | – | 172.29.0.0/16 |

The Docker IP range is the default and we don’t do anything with it. It is the same on every Docker deployment and is in the table for completeness, so we know what networks we are working with. It won’t be advertised out, but it can cause routing issues if outbound traffic is destined for an address in this range that sits outside the node. If you have this situation, you can change the range with the ‘bip’ key in the Docker ‘/etc/docker/daemon.json’ file and then restart Docker.

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "storage-driver": "overlay2",
  "bip": "172.16.99.1/16"
}

The K8 Pod IP Range is the range from which K8 Pods are assigned an IP address (each Pod gets its own unique IP address). We must also adjust the Calico default IP pool to be the same range, or a range within the K8 Pod IP Range. The Calico default pool must also be changed if you have K8 node interfaces in the 192.168.0.0/16 range. This overlap can cause conflicts, so either the interface addresses or the CALICO_IPV4POOL_CIDR range need to be changed.

The K8 Cluster IP Range is for services created in K8. An IP from this range is assigned to a service for a deployment and represents that deployment cluster-wide, but this address is generally only accessible from within the K8 cluster.

The K8 / MetalLB LB range is for a deployment’s service where a type of LoadBalancer has been defined. The IP address is assigned by MetalLB but advertised by Calico with BGP. More on this later.

Finally, the node subnet(s). You may have noticed that I have a single /28 for the leaf SVI side and the 3 K8 nodes; you would usually want to use a /29 per ‘leaf pair / K8 node’ pairing. I have a /28 because I have been working with different topologies and happen to have this one already set up as I write this, so there is a single bridge domain and IP subnet. A secondary address has been assigned to each leaf switch to provide a redundant next hop for the K8 nodes. The K8 nodes use this address as their default gateway. Once BGP is up it is no longer needed, and we could equally have pointed the gateway at one of the leaf IP addresses. BGP is using next-hop-self and peering using connected interfaces.
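Before building anything it can be worth sanity-checking the address plan. The following is a minimal sketch in Python (not part of the original build) that uses only the standard library `ipaddress` module and the networks from the table above. The helper names `find_overlaps` and `calico_pool_ok` are made up for illustration.

```python
import ipaddress
from itertools import combinations

# Address plan copied from the design table.
PLAN = {
    "node_subnet": ipaddress.ip_network("192.168.9.192/28"),
    "docker_bridge": ipaddress.ip_network("172.17.0.0/16"),
    "pod_cidr": ipaddress.ip_network("172.27.0.0/16"),
    "service_cidr": ipaddress.ip_network("172.28.0.0/16"),
    "loadbalancer_cidr": ipaddress.ip_network("172.29.0.0/16"),
}

# Calico's out-of-the-box IPv4 pool, which we are replacing.
CALICO_DEFAULT_POOL = ipaddress.ip_network("192.168.0.0/16")


def find_overlaps(plan):
    """Return sorted (name, name) pairs whose networks overlap."""
    return sorted(
        (a, b)
        for (a, net_a), (b, net_b) in combinations(plan.items(), 2)
        if net_a.overlaps(net_b)
    )


def calico_pool_ok(pool, plan):
    """The Calico pool must be the pod CIDR or a range inside it."""
    return ipaddress.ip_network(pool).subnet_of(plan["pod_cidr"])


overlaps = find_overlaps(PLAN)
default_pool_clash = CALICO_DEFAULT_POOL.overlaps(PLAN["node_subnet"])

print("overlapping networks:", overlaps)
print("default Calico pool clashes with node subnet:", default_pool_clash)
print("172.27.0.0/16 usable as Calico pool:", calico_pool_ok("172.27.0.0/16", PLAN))
```

With this plan nothing overlaps, while the untouched Calico default of 192.168.0.0/16 would collide with the node subnet, which is why CALICO_IPV4POOL_CIDR is changed later on.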

So we can draw this out like…

Setup

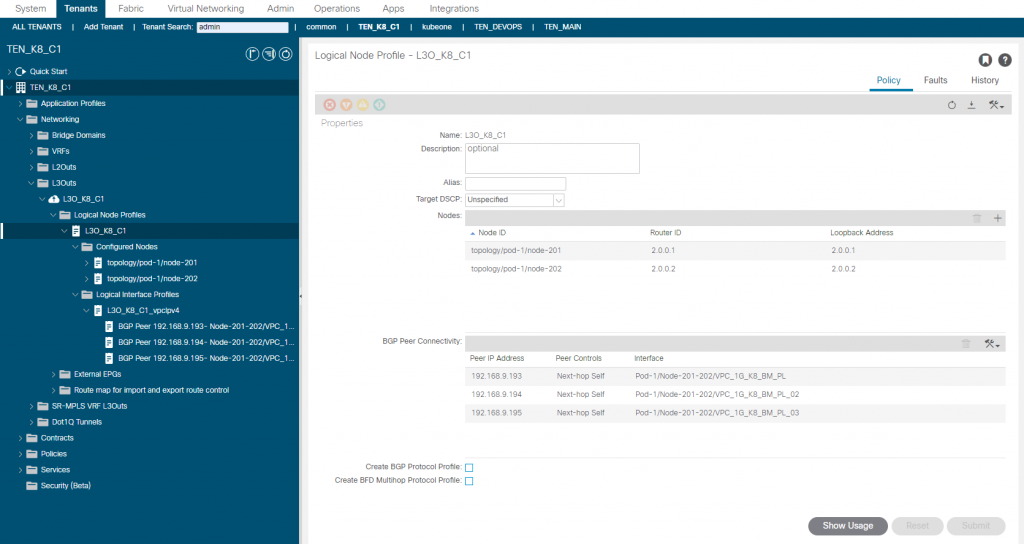

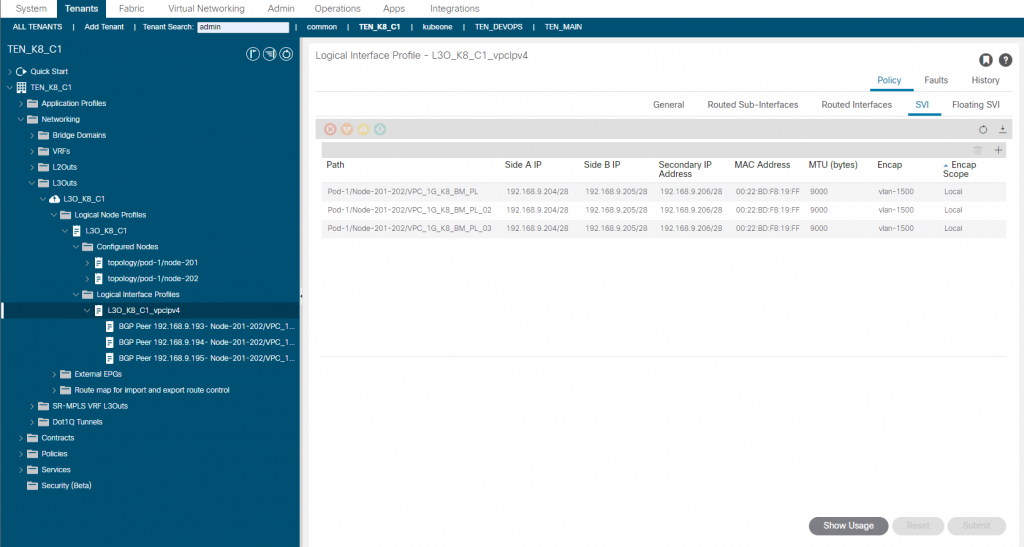

This deployment will have a dedicated ACI Tenant (TEN_K8_C1) where the L3Out (L3O_K8_C1) will be configured. We configure each node as an SVI connected to the L3Out, so we follow the usual steps to create the Interface Policy Groups (SVI) and attach them to the AEP, Leaf and Port Selectors. We also create a dedicated Layer 3 Domain and VLAN pool for this purpose. I am also setting the IPG ‘L2 Interface Policy’ to Port Local to avoid conflicts, but keep an eye on scaling if you do this for all IPGs.

ACI L3Out

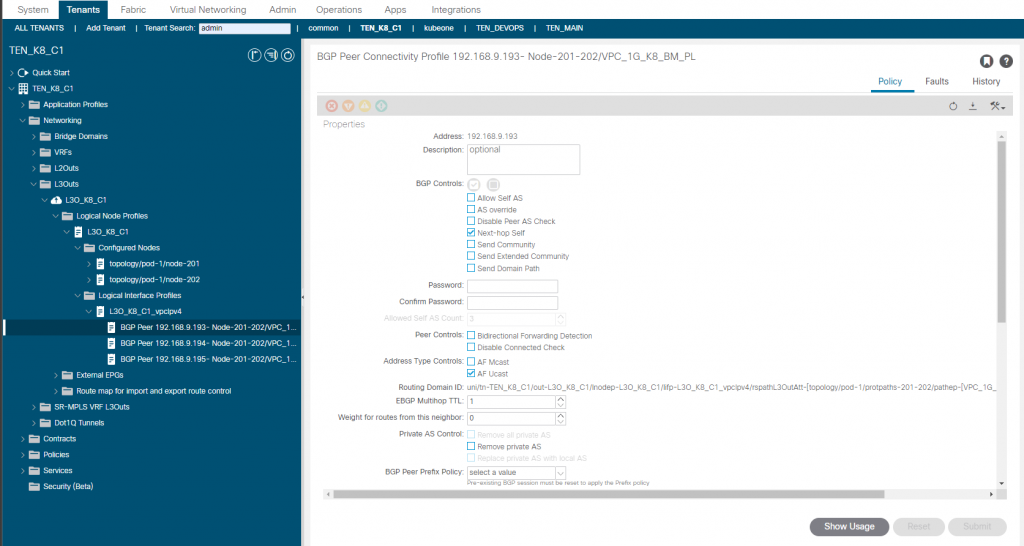

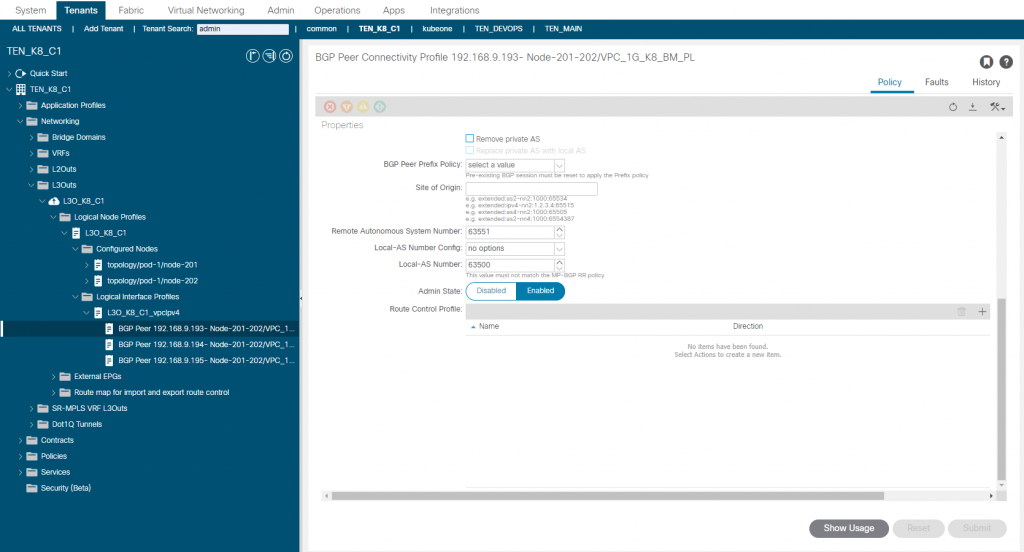

For the L3out we enable BGP, configure the leaf switches ID and loopbacks, create the SVI’s and setup the BGP options as follows;

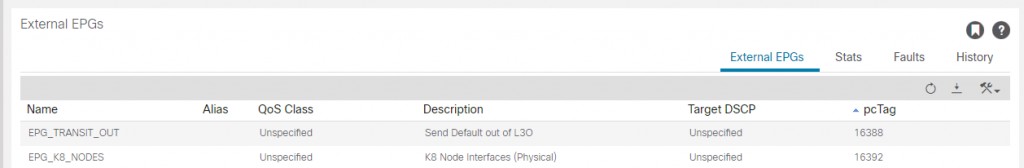

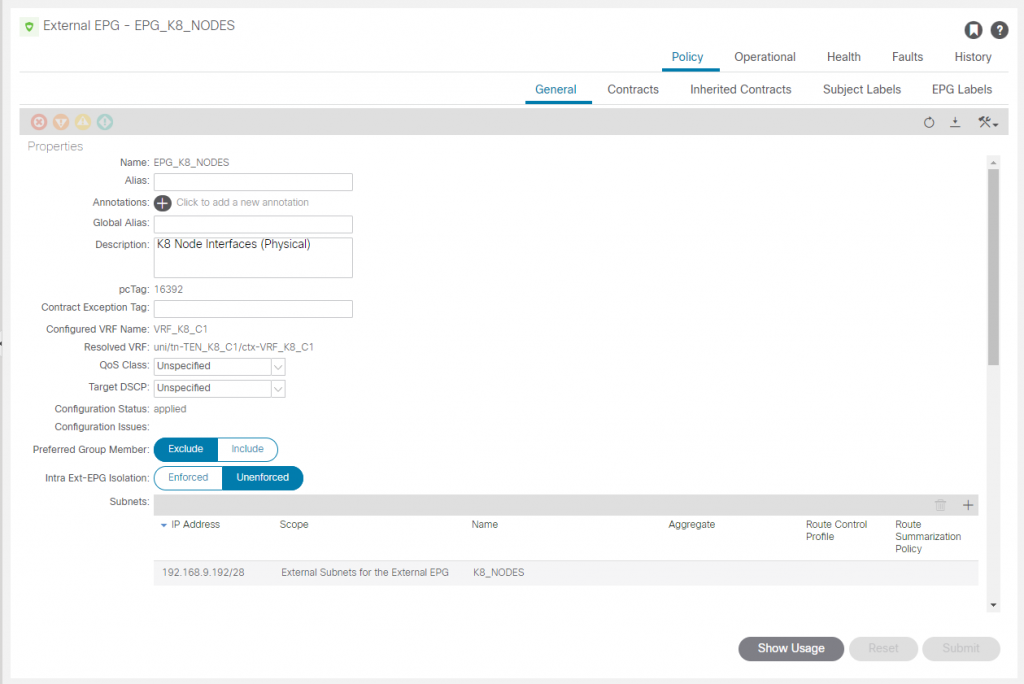

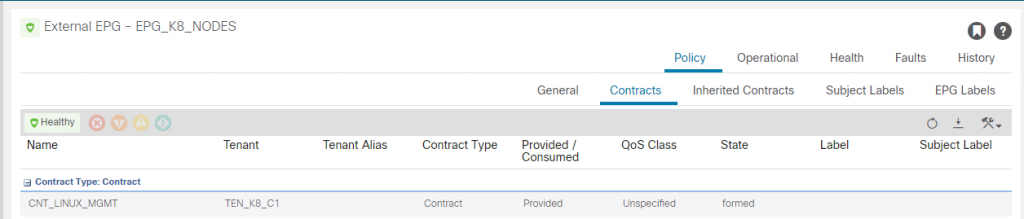

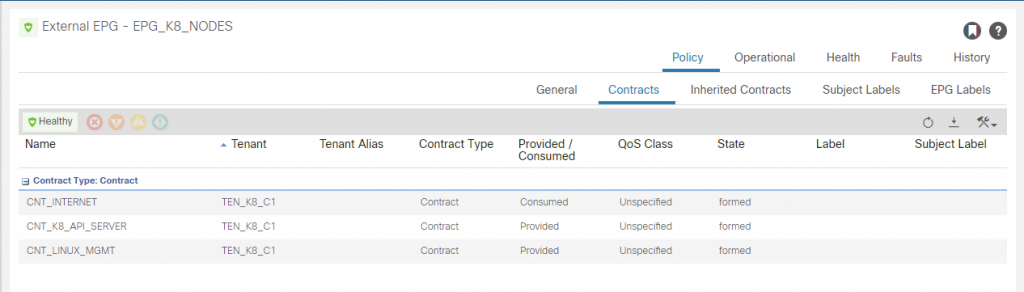

We will create an L3Out EEPG (EPG_K8_NODES) for the K8 hosts for the 192.168.9.192/28 network. There are more we need to create, but creating this one will allow us to SSH to the hosts once we bring the interfaces up.

The ‘CNT_LINUX_MGMT’ contract has SSH included so we can access the K8 hosts. It needs to be consumed from wherever you will be accessing the hosts. In my case I am on a desktop outside of the fabric, so this tenant will have a dedicated L3Out (L3O_EXTERNAL) for routing outside of the fabric. That L3Out will consume this contract so access to the nodes is possible.

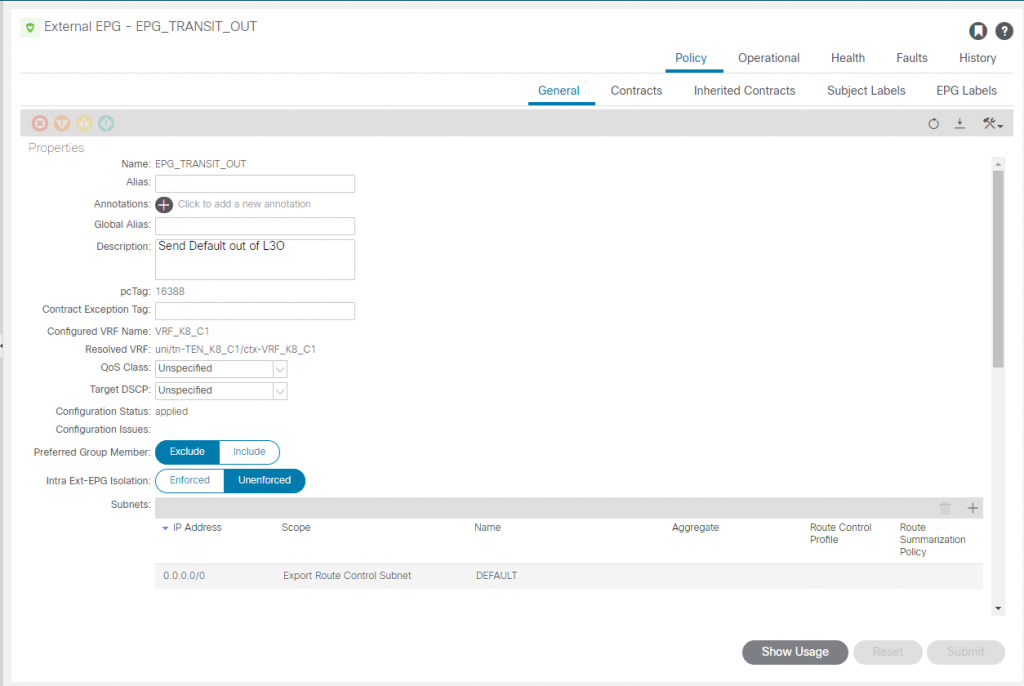

This is a transit routing configuration L3Out <> L3Out, so recall we need to mark networks for export as well as the usual marking on import to a specific EEPG. We will also export the default (0/0) received from the L3O_EXTERNAL out of the K8 L3Out (L3O_K8_C1) via BGP to the hosts.

For the L3Out (L3O_K8_C1), we add a second EEPG called (EPG_TRANSIT_OUT) marking the network 0/0 for export (out of this L3Out) via BGP.

We now need to configure the L3Out to outside the fabric. There is already an external router connected to the fabric providing a dot1q interface, with a separate VLAN for each tenant, so we will use this. The router already has a dot1q interface configured in VLAN 108 and OSPF area 108 configured too, so there is just the ACI side to do in the new L3Out (L3O_EXTERNAL). This L3Out will be in the same VRF as the K8 L3Out.

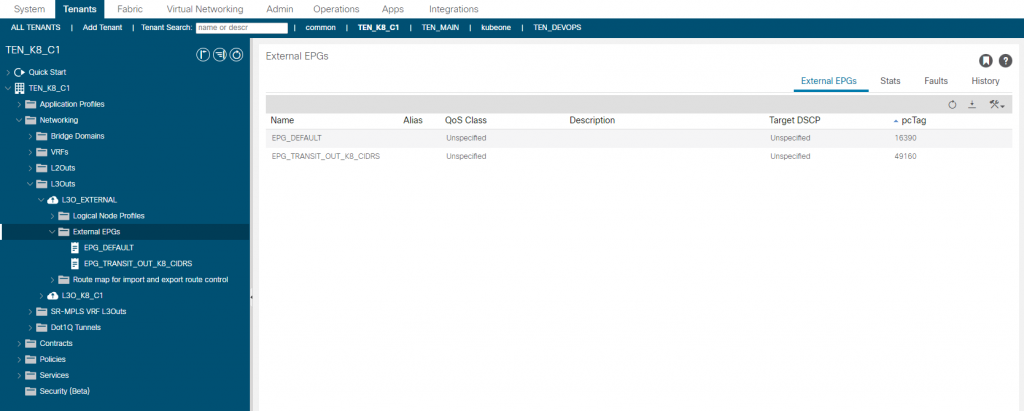

I won’t go through the creation of the L3Out again; I only want to cover the EEPGs created.

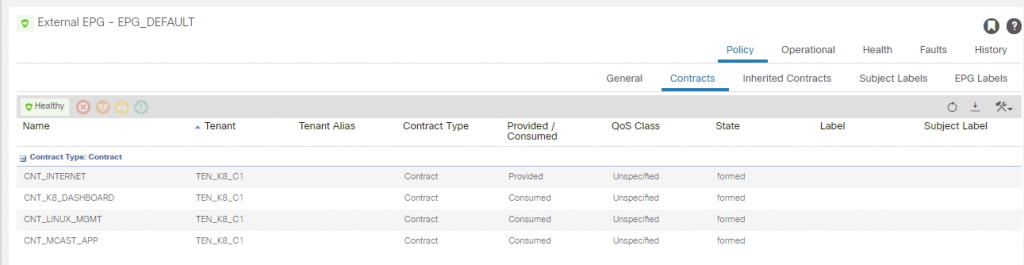

The EPG_DEFAULT is as you would guess: it is a 0/0 with the ‘import-security’ control marked and the CNT_LINUX_MGMT contract consumed. It also provides a contract called CNT_INTERNET, which provides access for the usual protocols (e.g. http, https, ssh, icmp, etc). This can be consumed by the EEPG EPG_K8_NODES to provide outbound internet access for them.

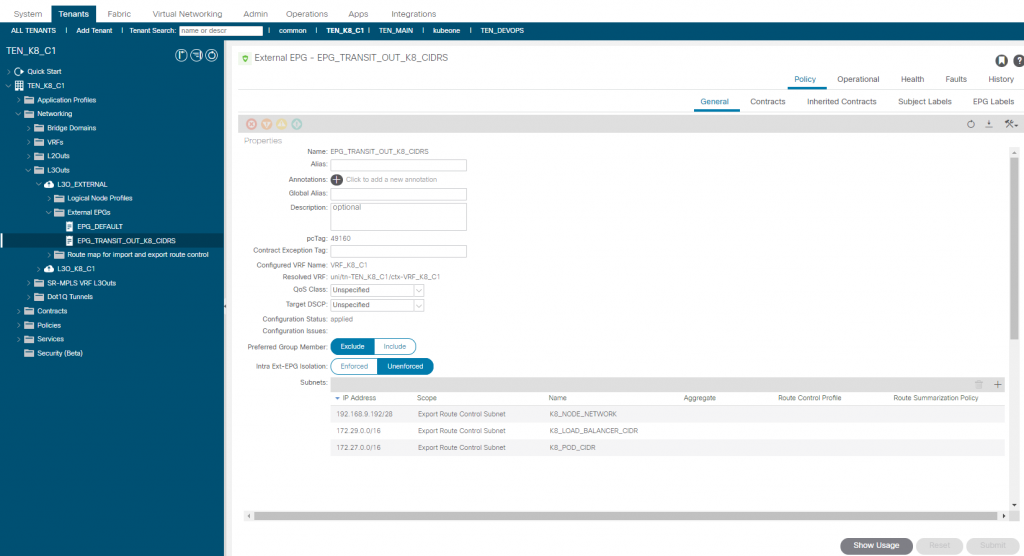

The EEPG ‘EPG_TRANSIT_OUT_K8_CIDRS’ has no contracts, but is configured to mark the networks to be exported via OSPF for K8 nodes, K8 load balancer and K8 Pod CIDRs. More on these later but right now the important one is the node network 192.168.9.192/28.

K8 Node Interfaces

The interface configuration below is from the master node, the workers are the same with the obvious IP address changes. Ensure that you have the right modules loaded for bonding and 802.1Q first.

1. Check modules are loaded

modinfo bonding

modinfo 8021q

2. Load modules if need be

modprobe --first-time bonding

modprobe --first-time 8021q

3. After configuration of network interfaces (below) then

nmcli con reload

systemctl restart network

# ifcfg-eno1

TYPE=Ethernet

BROWSER_ONLY=no

BOOTPROTO=none

NAME=eno1

DEVICE=eno1

ONBOOT=yes

MASTER=bond0

SLAVE=yes

NM_CONTROLLED="no"

MTU=9000

# ifcfg-eno2

TYPE=Ethernet

BROWSER_ONLY=no

BOOTPROTO=none

NAME=eno2

DEVICE=eno2

ONBOOT=yes

MASTER=bond0

SLAVE=yes

NM_CONTROLLED="no"

MTU=9000

# ifcfg-bond0

DEVICE=bond0

NAME=bond0

TYPE=Bond

BONDING_MASTER=yes

ONBOOT=yes

BOOTPROTO=none

BONDING_OPTS="mode=4 miimon=1000 min_links=1 updelay=2400"

NM_CONTROLLED="no"

MTU=9000

# ifcfg-bond0.1500

DEVICE=bond0.1500

BOOTPROTO=none

ONBOOT=yes

IPADDR=192.168.9.193

PREFIX=28

GATEWAY=192.168.9.206

DNS1=192.168.178.246

DNS2=8.8.8.8

DOMAIN=labs.haystacknetworks.com

VLAN=yes

NM_CONTROLLED="no"

K8 Node Additional Configuration

The following steps outline the process to get all the nodes (master and workers) up and ready with K8s installed but not running yet.

1. Disable FW (iptables is used instead)

systemctl stop firewalld

systemctl disable firewalld

2. Enable netfilter

cat <<EOF > /etc/modules-load.d/k8s.conf

br_netfilter

EOF

modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

3. Disable SELinux

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

4. Disable SWAP

sudo sed -i '/swap/d' /etc/fstab

sudo swapoff -a

5. Setup Docker Daemon File

mkdir -p /etc/docker

cat <<EOF > /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"storage-driver": "overlay2"

}

EOF

6. Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum install -y docker-ce-20.10.6

systemctl start docker

systemctl enable docker

systemctl status docker

7. Install K8s

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet-1.21.0-0 kubeadm-1.21.0-0 kubectl-1.21.0-0

systemctl enable kubelet

systemctl start kubelet

systemctl status kubelet

At this point we should have connectivity between the network and the K8 node interfaces. BGP won’t be up yet, as we have to initialise K8s and then install Calico.

K8s Initialization

K8s initialization on the master is basic, using the standard init command with the Pod and Cluster CIDRs.

kubeadm init --pod-network-cidr=172.27.0.0/16 --service-cidr=172.28.0.0/16

Then, using the output of the init command on the master, join each worker node to the master.

kubeadm join 172.20.0.10:6443 --token xxxxxxxxx --discovery-token-ca-cert-hash sha256:xxxxxxxx

Calico Installation

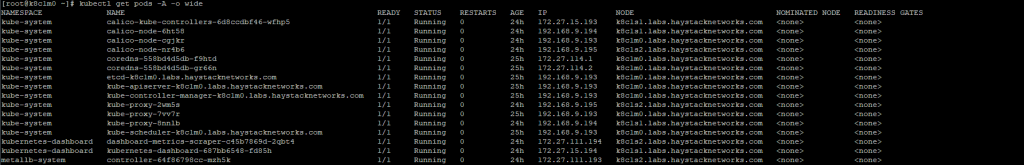

Calico will deploy a controller pod in the kube-system namespace on any available node, then a node pod on each K8 node including the master in the kube-system namespace as shown below.

There are a number of configuration files to use, primarily the manifest to install Calico. The remaining configuration files are consumed by Calico itself with the Calico CLI tool ‘calicoctl’.

The K8 manifest is a large file; a link to a Git repo with all these files in is provided in the references section below. The main points in terms of what to modify in this file are as follows:

---
# Source: calico/templates/calico-config.yaml
# This ConfigMap is used to configure a self-hosted Calico installation.
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Typha is disabled.
  typha_service_name: "none"
  # Configure the backend to use.
  calico_backend: "bird"

Ensure the calico_backend is bird. This is a BGP daemon for Linux and is what Calico uses for BGP connections to the ACI fabric.

kind: DaemonSet
apiVersion: apps/v1
spec:
  template:
    spec:
      containers:
        - name: calico-node
          env:
            - name: CALICO_IPV4POOL_CIDR
              value: "172.27.0.0/16"

As discussed in the design section, if you need/want the default Calico IPv4 pool to be anything other than 192.168.0.0/16, then uncomment the ‘CALICO_IPV4POOL_CIDR’ key and value and change the network to the one you want. The most recent version of the file from the official Calico site is https://docs.projectcalico.org/manifests/calico.yaml. This specific manifest is for 50 nodes or less and does not use etcd as a datastore.

This file needs to be applied to K8s with the usual command, using the file name you have given to the file.

kubectl apply -f ./01-calico.yaml

As I have a few files to use for installation, they all have an ordered prefix. The remaining files, which we will apply using calicoctl, are listed below.

# Apply using kubectl

-rw-r--r-- 1 root root 189406 Oct 22 16:27 01-calico.yaml

# Apply using calicoctl

-rw-r--r-- 1 root root 219 Apr 20 2021 02-ip_pool_NO_nat_NO_ipip.yaml

-rw-r--r-- 1 root root 687 Apr 20 2021 03-bgpconfig.yaml

-rw-r--r-- 1 root root 735 Apr 28 11:07 04-calico-k8c1m0-bgp.yaml

-rw-r--r-- 1 root root 733 Apr 20 2021 05-calico-k8c1s1-bgp.yaml

-rw-r--r-- 1 root root 733 Apr 20 2021 06-calico-k8c1s2-bgp.yaml

We need to install calicoctl. This can be done on any host that has access to the K8 nodes and has the kubeconfig file locally. For this I have installed calicoctl on the master. There are a few different options, but I am just installing the binary in an existing system environment path. For all options take a look at Install calicoctl (projectcalico.org).

curl -L -o calicoctl "https://github.com/projectcalico/calicoctl/releases/download/v3.20.2/calicoctl"

chmod +x calicoctl

We make some changes to the IP pool we set in the first Calico configuration file. Using this configuration we can add additional pools for other scenarios, such as static assignment and other transports, and also modify the default one. Here we make sure that the default pool is configured for no overlay, so we disable IP-in-IP and VXLAN, and set the assignment block size to /26. The content of the file 02-ip_pool_NO_nat_NO_ipip.yaml is:

apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: default-ipv4-ippool
spec:
  blockSize: 26
  cidr: 172.27.0.0/16
  ipipMode: Never
  natOutgoing: false
  nodeSelector: all()
  vxlanMode: Never
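To get a feel for what `blockSize: 26` means for the 172.27.0.0/16 pool, here is a small illustrative Python sketch using the standard `ipaddress` module. It only does the subnet arithmetic and does not talk to Calico. The `block_for` helper is a made-up name, and the two pod addresses are the ones that appear in the `kubectl get pods` output later in this article.

```python
import ipaddress

POOL = ipaddress.ip_network("172.27.0.0/16")
BLOCK_SIZE = 26  # blockSize from the IPPool resource

# Every /26 block that can be carved out of the pool.
blocks = list(POOL.subnets(new_prefix=BLOCK_SIZE))
block_count = len(blocks)
addresses_per_block = blocks[0].num_addresses


def block_for(pod_ip):
    """Return the /26 block that a pod address falls into."""
    return ipaddress.ip_network(f"{pod_ip}/{BLOCK_SIZE}", strict=False)


print(block_count, "blocks of", addresses_per_block, "addresses")
print(block_for("172.27.111.195"))
print(block_for("172.27.15.195"))
```

Each node is handed one or more of these blocks and advertises the block, not the individual pod addresses, which keeps the number of BGP routes in the fabric small.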

We apply this file as follows:

calicoctl apply -f ./02-ip_pool_NO_nat_NO_ipip.yaml

Next we need to configure the BGP process on Calico. This is a basic configuration file where we set the CIDR for the LoadBalancer addresses that will be assigned by MetalLB.

apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: default
spec:
  logSeverityScreen: Info
  nodeToNodeMeshEnabled: false
  # Provided via LoadBalancer (MetalLB), allocated via service file
  # and ip in service file from external LB or auto/orch app
  serviceLoadBalancerIPs:
    - cidr: 172.29.0.0/16

We apply this file as follows:

calicoctl apply -f ./03-bgpconfig.yaml

Finally we apply the files specific to each K8 node for the BGP configuration. Each file provides the node-specific details such as the BGP AS and peering addresses. These are again applied using calicoctl, and we will apply each file from the master. You do not have to run calicoctl on worker node #2 to apply the worker #2 node configuration; the node the configuration applies to is stated in the file. Below is the file for the master node. The other nodes are similar and are in the Git repo given at the end of this page.

#
# K8 Master Node #1.
#
---
# Node k8c1m0
apiVersion: projectcalico.org/v3
kind: Node
metadata:
  name: k8c1m0.labs.haystacknetworks.com
spec:
  bgp:
    # K8 Node IP/AS
    ipv4Address: 192.168.9.193/28
    asNumber: 63551
---
# ACI Leaf 1
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: leaf201-k8c1m0
spec:
  # ACI Leaf IP/AS
  peerIP: 192.168.9.204
  asNumber: 63500
  # Apply to K8 Node
  node: k8c1m0.labs.haystacknetworks.com
---
# ACI Leaf 2
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: leaf202-k8c1m0
spec:
  # ACI Leaf IP/AS
  peerIP: 192.168.9.205
  asNumber: 63500
  # Apply to K8 Node
  node: k8c1m0.labs.haystacknetworks.com
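Since the per-node files differ only in the node name, address and AS number, they lend themselves to being generated. The following Python sketch is one possible way to do that and is not how the files in the Git repo were produced. The worker addresses and AS numbers are taken from the leaf BGP neighbor output shown later in this article, and `render_node` is a hypothetical helper name.

```python
DOMAIN = "labs.haystacknetworks.com"
LEAF_AS = 63500
LEAVES = [("leaf201", "192.168.9.204"), ("leaf202", "192.168.9.205")]

# (short name, node IP, node AS). Worker values are read from the
# leaf 'show bgp ... neighbors' output further down the page.
NODES = [
    ("k8c1m0", "192.168.9.193", 63551),
    ("k8c1s1", "192.168.9.194", 63552),
    ("k8c1s2", "192.168.9.195", 63553),
]


def render_node(short_name, ip, asn, prefix_len=28):
    """Render one Node document plus one BGPPeer document per leaf."""
    fqdn = f"{short_name}.{DOMAIN}"
    docs = [
        "apiVersion: projectcalico.org/v3\n"
        "kind: Node\n"
        "metadata:\n"
        f"  name: {fqdn}\n"
        "spec:\n"
        "  bgp:\n"
        f"    ipv4Address: {ip}/{prefix_len}\n"
        f"    asNumber: {asn}\n"
    ]
    for leaf_name, leaf_ip in LEAVES:
        docs.append(
            "apiVersion: projectcalico.org/v3\n"
            "kind: BGPPeer\n"
            "metadata:\n"
            f"  name: {leaf_name}-{short_name}\n"
            "spec:\n"
            f"  peerIP: {leaf_ip}\n"
            f"  asNumber: {LEAF_AS}\n"
            f"  node: {fqdn}\n"
        )
    # Each YAML document is introduced by a '---' separator line.
    return "---\n" + "---\n".join(docs)


for name, ip, asn in NODES:
    print(f"# {name}")
    print(render_node(name, ip, asn))
```

The printed output can be redirected into the numbered files and applied with calicoctl in the same way as the hand-written versions.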

Again, we apply these files as follows:

calicoctl apply -f ./04-calico-k8c1m0-bgp.yaml

calicoctl apply -f ./05-calico-k8c1s1-bgp.yaml

calicoctl apply -f ./06-calico-k8c1s2-bgp.yaml

OK, let’s pause to see where we are. Doing this manually takes a lot of time; Ansible or Terraform is your friend here, but for our purposes it is easier to drill through it this way.

The current state should be that we have:

- The K8 nodes connected to the ACI leaf switches

- ACI SVI interfaces configured with the L3Outs and BGP running on those interfaces.

- K8 nodes interfaces configured and in an up state

- At this point, the directly connected interfaces should be able to ping each other between ACI interfaces and node interfaces.

- We also have Calico running BGP, so we should see that the BGP peers are connected. Let’s check this from both sides.

BGP Validation

Calicoctl gives us configuration and operational data as you see in the following outputs.

[root@k8c1m0 ~]# calicoctl get bgpPeer

NAME PEERIP NODE ASN

leaf201-k8c1m0 192.168.9.204 k8c1m0.labs.haystacknetworks.com 63500

leaf201-k8c1s1 192.168.9.204 k8c1s1.labs.haystacknetworks.com 63500

leaf201-k8c1s2 192.168.9.204 k8c1s2.labs.haystacknetworks.com 63500

leaf202-k8c1m0 192.168.9.205 k8c1m0.labs.haystacknetworks.com 63500

leaf202-k8c1s1 192.168.9.205 k8c1s1.labs.haystacknetworks.com 63500

leaf202-k8c1s2 192.168.9.205 k8c1s2.labs.haystacknetworks.com 63500

[root@k8c1m0 ~]# calicoctl get bgpConfiguration

NAME LOGSEVERITY MESHENABLED ASNUMBER

default Info false -

[root@k8c1m0 ~]# calicoctl node status

Calico process is running.

IPv4 BGP status

+---------------+---------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+---------------+---------------+-------+----------+-------------+

| 192.168.9.204 | node specific | up | 13:25:39 | Established |

| 192.168.9.205 | node specific | up | 13:25:39 | Established |

+---------------+---------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.
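If you want to check this state from a script rather than by eye, the table is easy to parse. This is a rough Python sketch that works on the text of `calicoctl node status` as shown above. It assumes the column layout stays as it is in this output, and the function names `parse_peers` and `all_established` are made up for the example.

```python
SAMPLE = """\
Calico process is running.

IPv4 BGP status
+---------------+---------------+-------+----------+-------------+
| PEER ADDRESS  |   PEER TYPE   | STATE |  SINCE   |    INFO     |
+---------------+---------------+-------+----------+-------------+
| 192.168.9.204 | node specific | up    | 13:25:39 | Established |
| 192.168.9.205 | node specific | up    | 13:25:39 | Established |
+---------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
"""

FIELDS = ("address", "type", "state", "since", "info")


def parse_peers(text):
    """Turn the table rows of 'calicoctl node status' into dicts."""
    peers = []
    for line in text.splitlines():
        if not line.startswith("|"):
            continue  # skip borders, headings and free text
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) != len(FIELDS) or cells[0] == "PEER ADDRESS":
            continue  # skip the header row or anything unexpected
        peers.append(dict(zip(FIELDS, cells)))
    return peers


def all_established(peers, expected):
    """True if every expected peer address is up and Established."""
    up = {
        p["address"]
        for p in peers
        if p["state"] == "up" and p["info"] == "Established"
    }
    return set(expected) <= up


peers = parse_peers(SAMPLE)
print(all_established(peers, ["192.168.9.204", "192.168.9.205"]))
```

In practice the text would come from running the command on each node, for example through `subprocess.run`, instead of the embedded sample.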

Getting more detailed information on the BGP sessions from Calico requires a shell session on each Calico node Pod. We will take K8 node #2 here as the example.

kubectl exec -i -t -n kube-system calico-node-nr4b6 -- /bin/bash

Calico uses a forked version of bird (projectcalico/bird: Calico’s fork of the BIRD protocol stack (github.com)), and we can use the CLI tool ‘birdcl’. If you’re coming from a Cisco world, here is a sample of Cisco to Bird commands. These are from the following site (Command_interface_examples · Wiki · labs / BIRD Internet Routing Daemon · GitLab (nic.cz)).

show ip route -- show route [table XXX]

show ip route bgp -- show route [table XXX] protocol <protocol_name> (show route proto ospf2 )

show ip route 1.2.0.0 longer- -- show route where net ~ 1.2.0.0/16

show ip bgp 1.2.0.0 -- show route where net ~ 1.2.0.0/16 all

show ip bgp sum -- show protocols

show ip bgp neighbors 1.2.3.4 -- show protocols all <protocol_name> (show protocols all ospf2)

show ip bgp neighbors 1.2.3.4 advertised-routes -- show route export <protocol_name>

clear ip bgp 1.2.3.4 -- reload <protocol_name> [in/out]

show ip route summary -- show route [table XXX] count

If we enter the bird CLI and run the ‘show protocols all’ command, we should see established peerings with the ACI leaf nodes on the interface addresses 192.168.9.204 (Leaf1) and 192.168.9.205 (Leaf2).

We will connect to the Calico node Pod running on worker #2 from the master.

[root@k8c1m0 ~]# kubectl exec --stdin --tty -n kube-system calico-node-nr4b6 calico-node -- /bin/bash

Defaulted container "calico-node" out of: calico-node, upgrade-ipam (init), install-cni (init), flexvol-driver (init)

[root@k8c1s2 /]# birdcl

BIRD v0.3.3+birdv1.6.8 ready.

bird> show protocols all

name proto table state since info

static1 Static master up 2021-10-22

Preference: 200

Input filter: ACCEPT

Output filter: REJECT

Routes: 3 imported, 0 exported, 3 preferred

Route change stats: received rejected filtered ignored accepted

Import updates: 3 0 0 0 3

Import withdraws: 0 0 --- 0 0

Export updates: 0 0 0 --- 0

Export withdraws: 0 --- --- --- 0

kernel1 Kernel master up 2021-10-22

Preference: 10

Input filter: ACCEPT

Output filter: calico_kernel_programming

Routes: 5 imported, 4 exported, 4 preferred

Route change stats: received rejected filtered ignored accepted

Import updates: 5 0 0 0 5

Import withdraws: 0 0 --- 0 0

Export updates: 34 17 2 --- 15

Export withdraws: 3 --- --- --- 12

device1 Device master up 2021-10-22

Preference: 240

Input filter: ACCEPT

Output filter: REJECT

Routes: 0 imported, 0 exported, 0 preferred

Route change stats: received rejected filtered ignored accepted

Import updates: 0 0 0 0 0

Import withdraws: 0 0 --- 0 0

Export updates: 0 0 0 --- 0

Export withdraws: 0 --- --- --- 0

direct1 Direct master up 2021-10-22

Preference: 240

Input filter: ACCEPT

Output filter: REJECT

Routes: 2 imported, 0 exported, 2 preferred

Route change stats: received rejected filtered ignored accepted

Import updates: 3 0 0 0 3

Import withdraws: 1 0 --- 0 1

Export updates: 0 0 0 --- 0

Export withdraws: 0 --- --- --- 0

Node_192_168_9_204 BGP master up 2021-10-22 Established

Description: Connection to BGP peer

Preference: 100

Input filter: ACCEPT

Output filter: calico_export_to_bgp_peers

Routes: 3 imported, 3 exported, 2 preferred

Route change stats: received rejected filtered ignored accepted

Import updates: 3 0 0 0 3

Import withdraws: 0 0 --- 0 0

Export updates: 27 4 17 --- 6

Export withdraws: 0 --- --- --- 9

BGP state: Established

Neighbor address: 192.168.9.204

Neighbor AS: 63500

Neighbor ID: 2.0.0.1

Neighbor caps: refresh restart-aware AS4

Session: external multihop AS4

Source address: 192.168.9.195

Hold timer: 119/180

Keepalive timer: 14/60

Node_192_168_9_205 BGP master up 2021-10-22 Established

Description: Connection to BGP peer

Preference: 100

Input filter: ACCEPT

Output filter: calico_export_to_bgp_peers

Routes: 3 imported, 3 exported, 1 preferred

Route change stats: received rejected filtered ignored accepted

Import updates: 3 0 0 0 3

Import withdraws: 0 0 --- 0 0

Export updates: 27 4 17 --- 6

Export withdraws: 0 --- --- --- 9

BGP state: Established

Neighbor address: 192.168.9.205

Neighbor AS: 63500

Neighbor ID: 2.0.0.2

Neighbor caps: refresh restart-aware AS4

Session: external multihop AS4

Source address: 192.168.9.195

Hold timer: 100/180

Keepalive timer: 2/60

bird>

We have established BGP peers between the K8 Calico node and the ACI leaf switches. Note the outbound BGP filter name ‘calico_export_to_bgp_peers’. This of course is what BGP will advertise out to its peers (ACI). Let’s look at each of the K8 nodes and connect to the calico-node Pod running on each to see what the outbound BGP filters are showing.

# This is on the Calico Node Pod On The Master

bird> show route filter calico_export_to_bgp_peers

172.27.114.0/26 blackhole [static1 2021-10-22] * (200)

172.29.0.0/16 blackhole [static1 2021-10-22] * (200)

bird>

# This is on the Calico Node Pod On Worker #1

bird> show route filter calico_export_to_bgp_peers

172.27.15.192/26 blackhole [static1 2021-10-22] * (200)

172.29.0.2/32 blackhole [static1 2021-10-22] * (200)

172.29.0.0/16 blackhole [static1 2021-10-22] * (200)

172.29.0.1/32 blackhole [static1 2021-10-22] * (200)

bird>

# This is on the Calico Node Pod On Worker #2

bird> show route filter calico_export_to_bgp_peers

172.27.111.192/26 blackhole [static1 2021-10-22] * (200)

172.29.0.2/32 blackhole [static1 2021-10-22] * (200)

172.29.0.0/16 blackhole [static1 2021-10-22] * (200)

bird>

We can see that the 172.29.0.0/16 network we configured for the LoadBalancer IP assignments is advertised from all the Calico nodes. We also have two /32 host routes in that range (172.29.0.1/32 and 172.29.0.2/32). These are IP addresses assigned by MetalLB for existing services (K8 Dashboard and my mcast app); more on this later after we install MetalLB 🙂
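To make the pattern in those three outputs explicit, the Python sketch below sorts the exported prefixes into pod blocks, the LoadBalancer aggregate and LoadBalancer host routes, and then lists which nodes advertise each host route. The prefixes are copied from the outputs above. The labels and the `classify` and `vip_advertisers` helpers are illustrative only.

```python
import ipaddress

POD_CIDR = ipaddress.ip_network("172.27.0.0/16")
LB_CIDR = ipaddress.ip_network("172.29.0.0/16")

# Prefixes passed by calico_export_to_bgp_peers on each node,
# copied from the bird outputs above.
EXPORTED = {
    "master": ["172.27.114.0/26", "172.29.0.0/16"],
    "worker1": ["172.27.15.192/26", "172.29.0.2/32",
                "172.29.0.0/16", "172.29.0.1/32"],
    "worker2": ["172.27.111.192/26", "172.29.0.2/32", "172.29.0.0/16"],
}


def classify(prefix):
    """Label a prefix by the part of the address plan it belongs to."""
    net = ipaddress.ip_network(prefix)
    if net == LB_CIDR:
        return "lb-aggregate"
    if net.prefixlen == 32 and net.subnet_of(LB_CIDR):
        return "lb-vip"
    if net.subnet_of(POD_CIDR):
        return "pod-block"
    return "other"


def vip_advertisers(exported):
    """Map each LoadBalancer /32 to the nodes that advertise it."""
    result = {}
    for node, prefixes in exported.items():
        for prefix in prefixes:
            if classify(prefix) == "lb-vip":
                result.setdefault(prefix, []).append(node)
    return result


for vip, nodes in vip_advertisers(EXPORTED).items():
    print(vip, "advertised by", ", ".join(nodes))
```

The master advertises no host routes at all, and each host route is only advertised by a subset of the workers, which matches the next hops seen on the ACI leaf later on.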

Let’s look at the route table on the master Calico node, where we can see routes received via BGP from the ACI fabric.

bird> show route

0.0.0.0/0 via 192.168.9.205 on bond0.1500 [Node_192_168_9_205 2021-10-22] ! (100/0) [AS65000?]

via 192.168.9.204 on bond0.1500 [Node_192_168_9_204 2021-10-22] (100/0) [AS65000?]

via 192.168.9.206 on bond0.1500 [kernel1 2021-10-22] (10)

169.254.0.0/16 dev bond0 [kernel1 2021-10-22] * (10)

192.168.9.192/28 dev bond0.1500 [direct1 2021-10-22] * (240)

10.243.176.0/24 via 192.168.9.204 on bond0.1500 [Node_192_168_9_204 2021-10-22] * (100/0) [AS65000?]

via 192.168.9.205 on bond0.1500 [Node_192_168_9_205 2021-10-22] (100/0) [AS65000?]

172.27.114.0/26 blackhole [static1 2021-10-22] * (200)

172.27.114.1/32 dev calie5500e8d8d1 [kernel1 2021-10-22] * (10)

172.27.114.2/32 dev cali047be684ea9 [kernel1 2021-10-22] * (10)

172.29.0.0/16 blackhole [static1 2021-10-22] * (200)

172.17.0.0/16 dev docker0 [direct1 2021-10-22] * (240)

172.16.1.0/24 via 192.168.9.204 on bond0.1500 [Node_192_168_9_204 2021-10-22] * (100/0) [AS65000?]

via 192.168.9.205 on bond0.1500 [Node_192_168_9_205 2021-10-22] (100/0) [AS65000?]

bird>

Taking a look at the ACI side on one of the leaf switches, we check that we have established neighbors.

leaf-01-201# show bgp ipv4 unicast neighbors vrf TEN_K8_C1:VRF_K8_C1 | grep BGP

BGP neighbor is 192.168.9.193, remote AS 63551, local AS 63500, ebgp link, Peer index 2, Peer Tag 0

BGP version 4, remote router ID 192.168.9.193

BGP state = Established, up for 1d02h

BGP table version 447, neighbor version 447

BGP neighbor is 192.168.9.194, remote AS 63552, local AS 63500, ebgp link, Peer index 3, Peer Tag 0

BGP version 4, remote router ID 192.168.9.194

BGP state = Established, up for 1d02h

BGP table version 447, neighbor version 447

BGP neighbor is 192.168.9.195, remote AS 63553, local AS 63500, ebgp link, Peer index 1, Peer Tag 0

BGP version 4, remote router ID 192.168.9.195

BGP state = Established, up for 1d02h

BGP table version 447, neighbor version 447

Good, and finally looking at the route table, we can see the Pod networks and the LoadBalancer networks host addresses received via BGP. You will notice the Pod networks assigned for each node are /26 as we specified in the Calico IP Pool configuration file [blockSize: 26].

leaf-01-201# show bgp ipv4 unicast vrf TEN_K8_C1:VRF_K8_C1

BGP routing table information for VRF TEN_K8_C1:VRF_K8_C1, address family IPv4 Unicast

BGP table version is 447, local router ID is 2.0.0.1

Status: s-suppressed, x-deleted, S-stale, d-dampened, h-history, *-valid, >-best

Path type: i-internal, e-external, c-confed, l-local, a-aggregate, r-redist, I-injected

Origin codes: i - IGP, e - EGP, ? - incomplete, | - multipath, & - backup

Network Next Hop Metric LocPrf Weight Path

*>r0.0.0.0/0 0.0.0.0 1 100 32768 ?

*>r2.0.0.1/32 0.0.0.0 0 100 32768 ?

*>i2.0.0.2/32 10.0.184.64 0 100 0 ?

*>r10.243.176.0/24 0.0.0.0 0 100 32768 ?

*>r172.16.1.0/24 0.0.0.0 0 100 32768 ?

*>e172.27.15.192/26 192.168.9.194 0 63500 63552 i

* i 10.0.184.64 100 0 63500 63552 i

*>e172.27.111.192/26 192.168.9.195 0 63500 63553 i

* i 10.0.184.64 100 0 63500 63553 i

*>e172.27.114.0/26 192.168.9.193 0 63500 63551 i

* i 10.0.184.64 100 0 63500 63551 i

* e172.29.0.0/16 192.168.9.195 0 63500 63553 i

* e 192.168.9.194 0 63500 63552 i

*>e 192.168.9.193 0 63500 63551 i

* i 10.0.184.64 100 0 63500 63551 i

* i172.29.0.1/32 10.0.184.64 100 0 63500 63552 i

*>e 192.168.9.194 0 63500 63552 i

* i172.29.0.2/32 10.0.184.64 100 0 63500 63552 i

* e 192.168.9.195 0 63500 63553 i

*>e 192.168.9.194 0 63500 63552 i

* i192.168.9.192/28 10.0.184.64 0 100 0 ?

*>r 0.0.0.0 0 100 32768 ?

So at this point we have a running K8 cluster, Calico running with BGP successfully peering between ACI and Calico. We have a few things left to do before we finish.

We need to;

- Configure MetalLB as a LoadBalancer on the K8 cluster to assign and load balance incoming traffic to pods.

- Add an application to the cluster

- Configure ACI contracts to allow traffic to/from the cluster and pods.

MetalLB – K8 Load Balancer

MetalLB is a load balancer for K8s deployments on-prem (or not on a cloud platform). It defines itself as a beta project (Q3-2021), so the usual warnings are in play here, but I do like it for the simplicity it provides. You can get more information on MetalLB here.

MetalLB uses BGP to advertise the LoadBalancer IP address assignments. This was a problem with Calico in the past, because Calico also uses BGP and the two conflicted: each tried to establish its own BGP session from the same node to the same peer (the ACI leaf switch). This has now been resolved: we enable BGP on MetalLB, but the addresses it assigns are advertised by Calico over the Calico BGP session.

You can find the latest manifests links on the MetalLB site here, I am using an older version here so please check for the latest first.

First let’s create a namespace for the MetalLB deployment.

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.6/manifests/namespace.yaml

Create the MetalLB deployment.

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.6/manifests/metallb.yaml

Finally we need to apply the configuration for BGP and for the LoadBalancer CIDR block. The CIDR block is the 172.29.0.0/16 we configured in the Calico BGP configuration.

# 03-address-pool.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  # Enable BGP for MetalLB, but dont add BGP
  # neighbors, AS number etc as Calico has the node
  # BGP session with the leaf/tor switches.
  # This works as MetalLB assigns the LB IP address
  # and Calico advertises it. See following for details;
  # https://github.com/projectcalico/confd/pull/422
  # Address range must match Calico address range
  # for serviceLoadBalancerIPs:cidr in Calico BGPConfiguration
  config: |
    address-pools:
    - name: default
      avoid-buggy-ips: true
      protocol: bgp
      addresses:
      - 172.29.0.0/16

kubectl apply -f ./03-address-pool.yaml

Confirm we have MetalLB running.

[root@k8c1m0]# kubectl get deployments -n metallb-system controller -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

controller 1/1 1 1 42h controller metallb/controller:v0.9.6 app=metallb,component=controller

So we now have a running K8 cluster with Calico and MetalLB connected to ACI via L3Outs using BGP for route advertisement. Next we deploy an application to see how things work.

Application Deployment

I will deploy my own multicast application, which is just a multicast sender and receiver that generates a web page for NGINX to serve, containing details of other multicast sources it has seen on a given address. Calico does not currently (Q3-2021) support multicast, unlike the Cisco CNI, which does; see here for more details.

Let’s deploy the application.

# 01-mcast.yaml
kind: Namespace
apiVersion: v1
metadata:
  name: multicast
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: mcast-app
  name: mcast
  namespace: multicast
spec:
  replicas: 2
  selector:
    matchLabels:
      app: mcast-app
  template:
    metadata:
      labels:
        app: mcast-app
    spec:
      affinity:
        # keep pods on separate nodes
        # will fail to build a pod if no host
        # is available not running this app.
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - mcast-app
              topologyKey: "kubernetes.io/hostname"
      containers:
        - name: mcast
          image: simonbirtles/basic:mcast_basic
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              protocol: TCP
      restartPolicy: Always

Apply as usual and validate we have the deployment running.

kubectl apply -f ./01-mcast.yaml

[root@k8c1m0 ~]# kubectl get deployments -A

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system calico-kube-controllers 1/1 1 1 42h

kube-system coredns 2/2 2 2 42h

kubernetes-dashboard dashboard-metrics-scraper 1/1 1 1 42h

kubernetes-dashboard kubernetes-dashboard 1/1 1 1 42h

metallb-system controller 1/1 1 1 42h

multicast mcast 2/2 2 2 41h

[root@k8c1m0 ~]# kubectl get pods -n multicast -o wide

NAME READY STATUS RESTARTS AGE IP NODE

mcast-6554746948-9qwzq 1/1 Running 0 41h 172.27.111.195 k8c1s2.labs.haystacknetworks.com

mcast-6554746948-cbr79 1/1 Running 0 41h 172.27.15.195 k8c1s1.labs.haystacknetworks.com

As we can see, we have two pods deployed for the mcast application. These are running on separate workers, as shown in the last output, and will be serving on port 80 as stated in the deployment configuration.

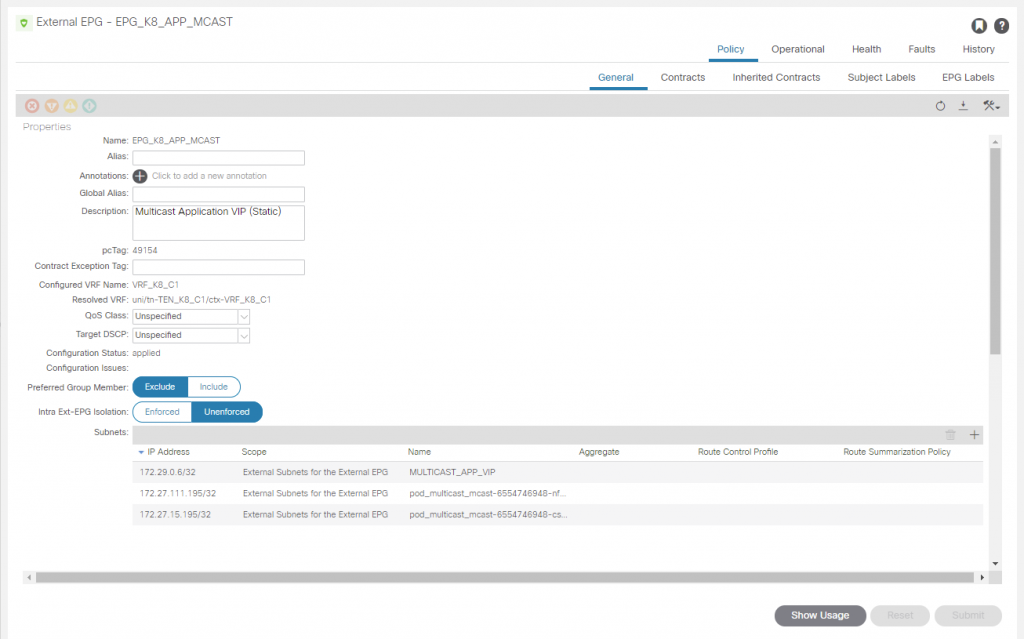
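To make the effect of the required pod anti-affinity rule a little more concrete, here is a minimal Python sketch of the placement logic. It is only a toy model and not how the Kubernetes scheduler is implemented: it assumes the two workers (k8c1s1 and k8c1s2) are the only schedulable nodes, and the pod names `mcast-0`, `mcast-1` and so on are made up for illustration.

```python
def schedule(replicas, workers):
    """Toy placement under a required hostname anti-affinity rule.

    Each worker may host at most one pod of the app. Replicas that
    cannot be placed are returned as pending.
    """
    free = list(workers)          # workers not yet running the app
    placed, pending = {}, []
    for i in range(replicas):
        pod = f"mcast-{i}"        # hypothetical pod name
        if free:
            placed[pod] = free.pop(0)
        else:
            pending.append(pod)   # no host left without this app
    return placed, pending


workers = ["k8c1s1", "k8c1s2"]    # assumption: only workers are schedulable
placed, pending = schedule(2, workers)
placed3, pending3 = schedule(3, workers)
print(placed, pending)
print(placed3, pending3)
```

With two replicas and two workers everything is placed; asking for a third replica leaves one pod pending, which is the "will fail to build a pod" behaviour noted in the deployment comments.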

Now we deploy the service configuration for the multicast application. For the service load balancer external IP (VIP), we can let MetalLB choose one from the CIDR we gave it (172.29.0.0/16), or we can specify one ourselves as long as it is within 172.29.0.0/16 and is not already in use. In this case we are going to assign one ourselves (172.29.0.6). The service configuration is shown below, after a note on ‘externalTrafficPolicy’.
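The rule for a static VIP (inside the MetalLB range and not already taken) can be sanity checked before applying the service. The following is a small Python sketch using only the standard library; the function name `valid_static_vip` and the `in_use` list are my own illustration, not part of MetalLB.

```python
import ipaddress

# MetalLB load balancer range from the design table
METALLB_POOL = ipaddress.ip_network("172.29.0.0/16")


def valid_static_vip(ip, pool=METALLB_POOL, in_use=()):
    """Return True if ip is inside the pool and not already assigned."""
    addr = ipaddress.ip_address(ip)
    taken = {ipaddress.ip_address(a) for a in in_use}
    return addr in pool and addr not in taken


print(valid_static_vip("172.29.0.6"))                         # inside the pool
print(valid_static_vip("172.16.0.6"))                         # outside the pool
print(valid_static_vip("172.29.0.7", in_use=["172.29.0.7"]))  # already used
```

An address such as 172.16.0.6 fails the check because it is outside 172.29.0.0/16, so MetalLB would have no pool to allocate it from.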

The ‘externalTrafficPolicy’ is set to ‘Local’, which means that with BGP, traffic destined for the service VIP is only sent to nodes that have running pods for this application. kube-proxy therefore does not load balance again across the available nodes; it only sends to pods local to the K8 node that received the traffic. The other option, ‘Cluster’, is also available; read this to understand how MetalLB respects these options when using BGP.

    # 02-mcast-service-lb-static.yml
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: mcast
      namespace: multicast
    spec:
      ports:
      - port: 80
        targetPort: 80
      selector:
        app: mcast-app
      type: LoadBalancer
      # LoadBalancer service with the static public IP address,
      loadBalancerIP: 172.29.0.6
      # Local - Direct traffic to a specific node to
      # be load balanced by kube-proxy to pods
      # on the 'local' node only.
      externalTrafficPolicy: Local
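As a rough illustration of the difference between ‘Local’ and ‘Cluster’, the Python sketch below models which nodes would announce the service VIP over BGP. It is a simplification under stated assumptions: it assumes all three nodes peer with the fabric, uses the pod placement from the earlier output, and the function `announcing_nodes` is my own naming rather than anything from MetalLB or Calico.

```python
def announcing_nodes(policy, nodes, pods_by_node):
    """Return the nodes expected to advertise the service VIP.

    Local   -> only nodes with at least one local pod for the service
    Cluster -> every node, since kube-proxy can forward to any pod
    """
    if policy == "Local":
        return [n for n in nodes if pods_by_node.get(n)]
    if policy == "Cluster":
        return list(nodes)
    raise ValueError(f"unknown externalTrafficPolicy: {policy}")


nodes = ["k8c1m0", "k8c1s1", "k8c1s2"]       # assumption: all nodes peer via BGP
pods_by_node = {
    "k8c1s1": ["mcast-6554746948-cbr79"],
    "k8c1s2": ["mcast-6554746948-9qwzq"],
}
local_nodes = announcing_nodes("Local", nodes, pods_by_node)
cluster_nodes = announcing_nodes("Cluster", nodes, pods_by_node)
print(local_nodes)
print(cluster_nodes)
```

With ‘Local’ the fabric only learns 172.29.0.6 from the two workers running the pods, so there is no second hop between nodes and the client source IP is preserved.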

ACI EPGs & Contracts

In the L3O ‘L3O_K8_C1’ we have the two EEPGs that we created earlier: one for the association of the K8 node interfaces on the 192.168.9.192/28 network, and the other an export EEPG for the default route.

K8 Nodes to Pods Communication

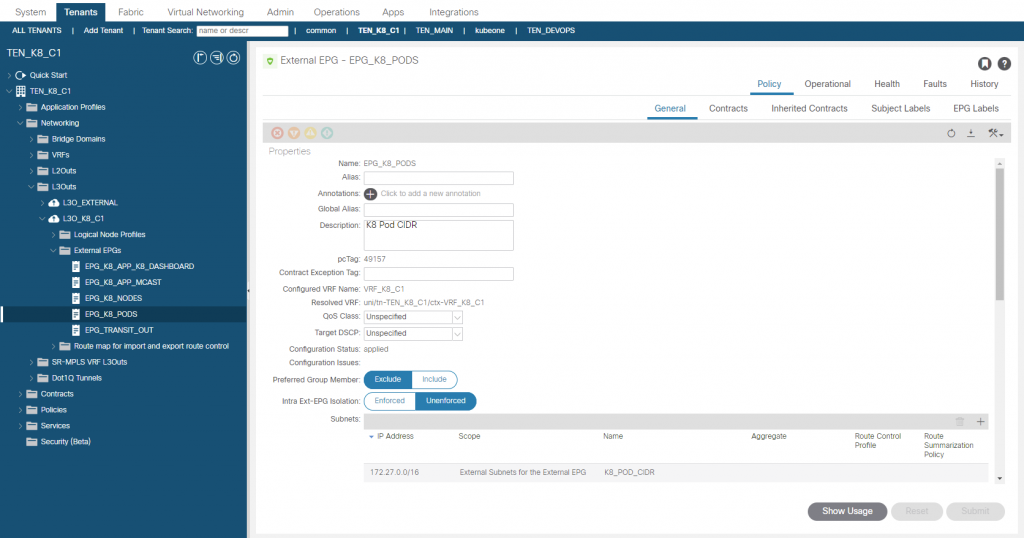

We need to add an EEPG for the K8 pods CIDR. We need this because we have to allow the pod IP addresses to communicate with each other (intra and inter node) and to communicate with the node interfaces. This is one of the K8 networking rules. To explain why it is needed, take the example of a pod with an application that needs to talk to the K8 API server. The API server (172.28.0.1:443) is on the master node and the application pod is on the worker #1 node. The application will try to send packets to the API server (172.28.0.1:443). On each K8 node, iptables is configured to translate destination 172.28.0.1:443 to 192.168.9.193:6443, which is the master node physical interface and the port the API server is really running on. If we don’t permit connectivity between the node and pod networks, this would fail, and so would any application that needs this access.
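The translation described above can be sketched in a few lines of Python to show why the fabric sees pod-to-node traffic rather than traffic to the cluster IP. This is only a model of the behaviour, not the real iptables rules; it assumes the pod source address is not NATed on the way out of the worker, and the helper names are my own.

```python
import ipaddress

POD_NET = ipaddress.ip_network("172.27.0.0/16")       # K8 pod range
NODE_NET = ipaddress.ip_network("192.168.9.192/28")   # K8 node subnet

# Cluster IP and port -> real API server endpoint on the master node
DNAT_RULES = {("172.28.0.1", 443): ("192.168.9.193", 6443)}


def translate(dst_ip, dst_port):
    """Apply the destination NAT done on the node, if a rule matches."""
    return DNAT_RULES.get((dst_ip, dst_port), (dst_ip, dst_port))


def needs_pod_to_node_contract(src_ip, dst_ip, dst_port):
    """True if, after DNAT, the flow is pod subnet -> node subnet."""
    real_ip, _ = translate(dst_ip, dst_port)
    return (ipaddress.ip_address(src_ip) in POD_NET
            and ipaddress.ip_address(real_ip) in NODE_NET)


# A pod on worker #1 calling the API server cluster IP
print(translate("172.28.0.1", 443))
print(needs_pod_to_node_contract("172.27.15.195", "172.28.0.1", 443))
```

The packet that leaves the worker has a pod source address and a node destination address, which is exactly the pair of networks the contract has to permit.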

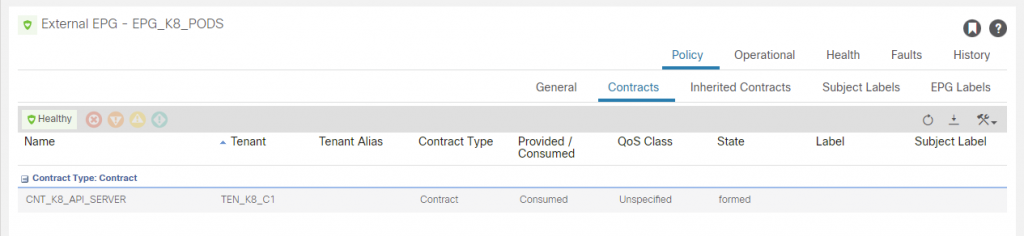

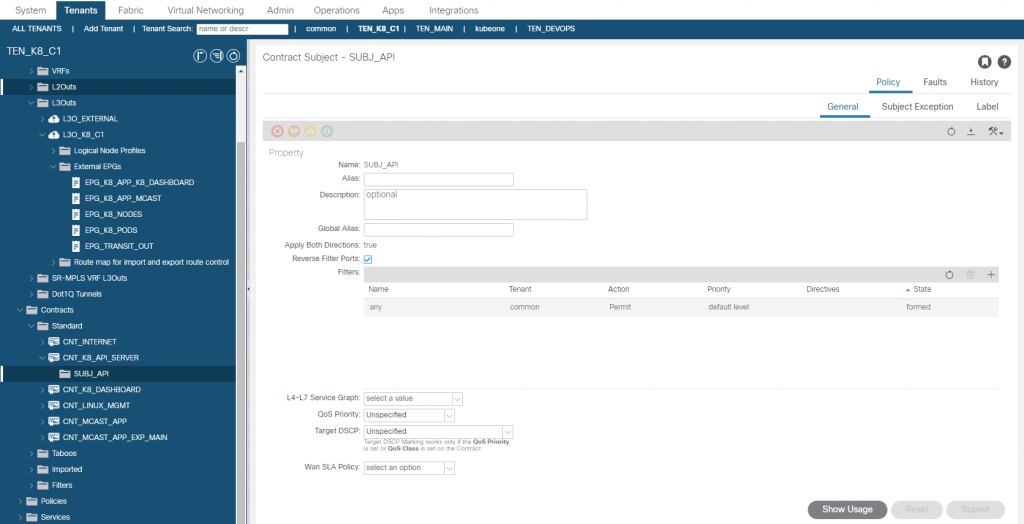

In the L3O_K8_C1 L3O, we create an EEPG called ‘EPG_K8_PODS’ and add the pod subnet CIDR (172.27.0.0/16) with ‘import-security’ (External Subnets for the External EPG). We then create a contract ‘CNT_K8_API_SERVER’ with an ‘any’ permit and apply it between the EPG_K8_NODES and EPG_K8_PODS EEPGs. Note that it doesn’t matter which is provider or consumer: with an ‘any’ filter and the ‘reverse filter ports’ option selected, traffic can be initiated from either direction (which is why you should be very sure you want to use an ‘any’ filter on ACI contracts!).

You can test this by logging into a worker node and running curl to the API server. Ignore the failure status; it's expected as you have not authenticated.

    [root@k8c1s1 ~]# curl https://172.28.0.1:443 -k
    {
      "kind": "Status",
      "apiVersion": "v1",
      "metadata": {
      },
      "status": "Failure",
      "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",
      "reason": "Forbidden",
      "details": {
      },
      "code": 403
    }

Application Communication

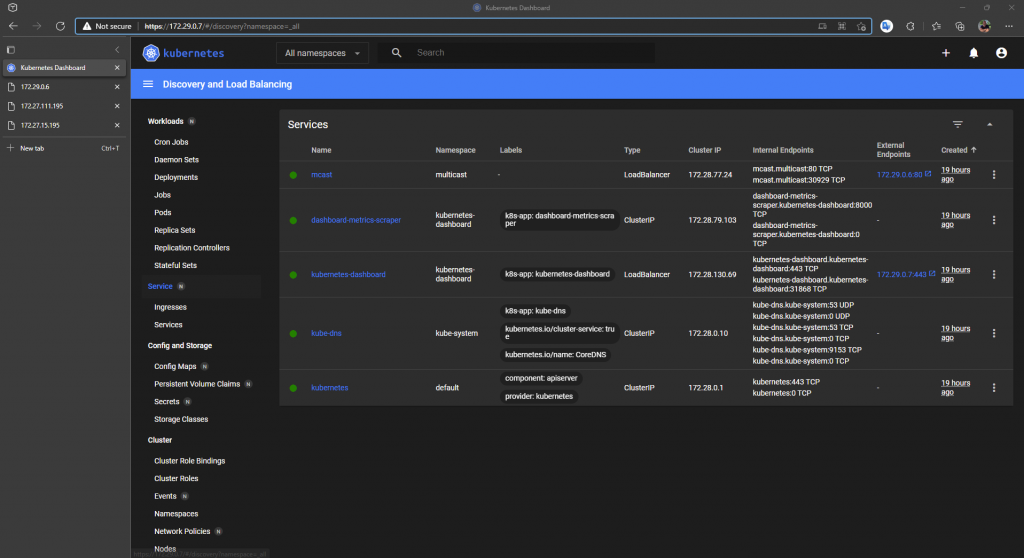

I have installed my multicast application and also the K8 dashboard, which is also configured with a load balancer service, with a static IP address of 172.29.0.7, and will be accessed from outside of the ACI fabric too. Note that we are not using ‘kubectl proxy’ to access the dashboard; we are providing the access natively via a MetalLB LoadBalancer service. This goes for both applications.

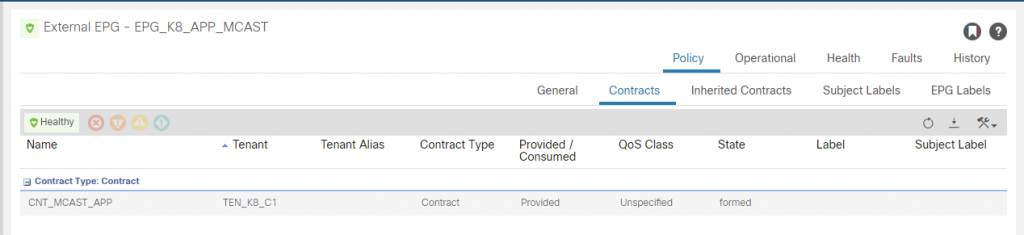

First we create an EEPG in the L3O_K8_C1 L3O and add the LoadBalancer IP address we statically assigned to the service. We will also add the pod IP addresses for the multicast application pods. I am only adding these to show the access that we have; usually we would expose only the LoadBalancer IP.

If we were not using MetalLB as a load balancer, we could add the pod IP addresses and use an external NLB and/or an ACI PBR Service Graph, but we would need a mechanism to keep these IP addresses up to date, as pods and their IP addresses are ephemeral.

The L3O for external fabric access has this contract applied as a consumer. The contract is permitting HTTP which is what we have configured on the service.

You will also see a consumed contract for the K8 dashboard, which permits HTTPS as per the service that was applied on K8s for the deployment. We will now configure the EEPG for the K8 dashboard.

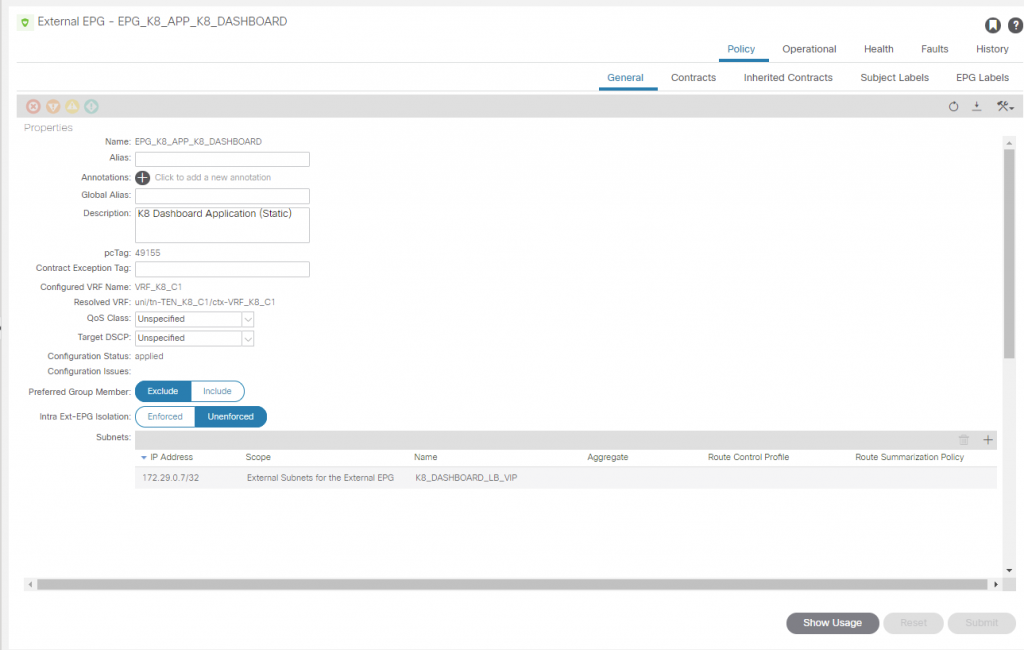

In the EEPG ‘EPG_K8_APP_K8_DASHBOARD’ we add the service load balancer static IP we configured ‘172.29.0.7/32’ and apply the scope ‘import-security’ (External Subnets for the External EPG).

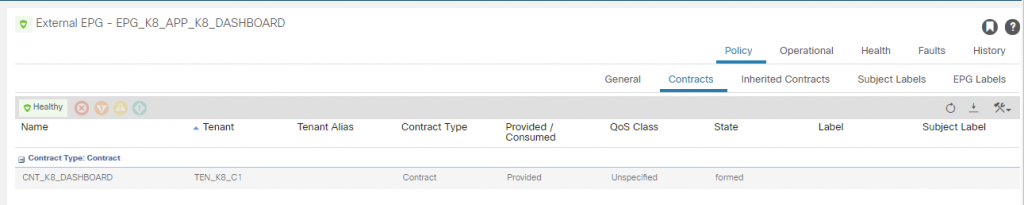

We then create a contract permitting HTTPS and apply it to the EPG_K8_APP_K8_DASHBOARD EEPG as a provider; the consumer is the ‘L3O_EXTERNAL’ EEPG ‘EPG_DEFAULT’.
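To tie the EEPG subnets and contracts together, here is a simplified Python model of how a flow is classified into EEPGs by longest prefix match and then checked against the contracts described so far. It is a sketch for reasoning only and does not reflect how ACI programs its policy in hardware. Several values are assumptions: the 0.0.0.0/0 subnet on EPG_DEFAULT, the 192.168.9.192/28 subnet on EPG_K8_NODES, the contract name `CNT_K8_DASHBOARD_HTTPS` and the external client 10.1.1.1.

```python
import ipaddress

EEPG_SUBNETS = {
    "EPG_K8_NODES": "192.168.9.192/28",         # assumption: node subnet
    "EPG_K8_PODS": "172.27.0.0/16",
    "EPG_K8_APP_K8_DASHBOARD": "172.29.0.7/32",
    "EPG_DEFAULT": "0.0.0.0/0",                 # assumption: catch-all
}

# (name, consumer, provider, port); port None models the 'any' filter
CONTRACTS = [
    ("CNT_K8_API_SERVER", "EPG_K8_NODES", "EPG_K8_PODS", None),
    ("CNT_K8_DASHBOARD_HTTPS", "EPG_DEFAULT", "EPG_K8_APP_K8_DASHBOARD", 443),
]


def classify(ip):
    """Pick the EEPG whose subnet is the longest match for ip."""
    addr = ipaddress.ip_address(ip)
    matches = []
    for name, cidr in EEPG_SUBNETS.items():
        net = ipaddress.ip_network(cidr)
        if addr in net:
            matches.append((net.prefixlen, name))
    return max(matches)[1]


def permitted(src_ip, dst_ip, dst_port):
    """Check whether any modelled contract allows the flow."""
    src, dst = classify(src_ip), classify(dst_ip)
    for _name, consumer, provider, port in CONTRACTS:
        if port is None and {src, dst} == {consumer, provider}:
            return True       # 'any' filter works in either direction
        if port == dst_port and (src, dst) == (consumer, provider):
            return True
    return False


print(classify("172.29.0.7"))
print(permitted("10.1.1.1", "172.29.0.7", 443))   # hypothetical client
print(permitted("10.1.1.1", "172.29.0.7", 80))
```

The /32 for the dashboard VIP wins over the catch-all subnet, so only HTTPS from the external EEPG reaches it, while the ‘any’ contract lets pods and nodes talk in both directions.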

With all this in place we should now have two functioning applications.

First, the K8 dashboard, using the load balancer IP of 172.29.0.7 that we specified.

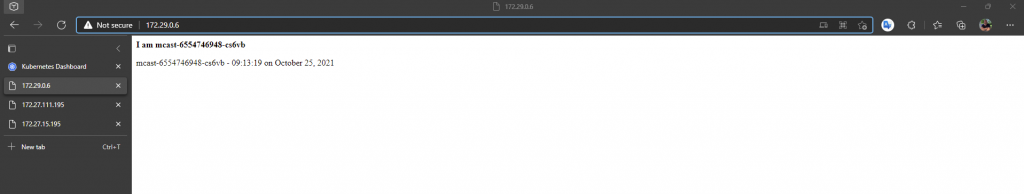

Next, let's look at the multicast application using the static load balancer IP 172.29.0.6 we assigned. Recall that the multicast application emits multicast packets and also listens for them on a specific multicast address. It then lists all sources on a web page which is provided on each pod. We only expect to see one source using Calico currently, as multicast is not yet supported (Q3-2021), so we only see the source of the pod that we are directed to by the load balancer.

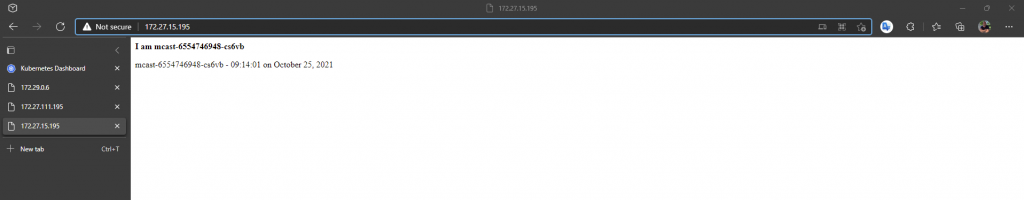

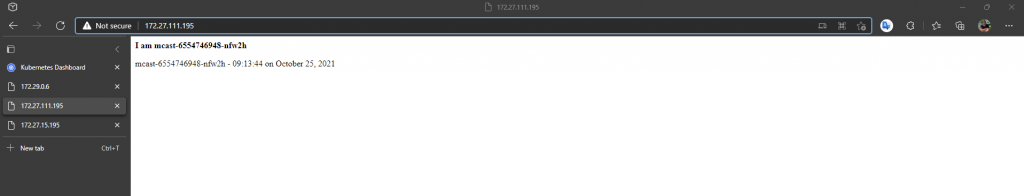

We also added the multicast pod IP addresses into the EEPG for the multicast application, so we should be able to access each pod directly too. We have two pods deployed, so we check each pod on port 80.

Wrap Up

There is no integration between ACI and the Calico CNI; the ‘connection’ is vanilla IP/BGP, which makes things simple. Calico provides a boat load more configuration options than we have touched on here and is actively being developed for both on-premise and cloud (AWS, Azure, GCP) environments, which is great in terms of providing a level of consistency in hybrid cloud environments. As for the ‘lack of integration’ between ACI and Calico, this can be solved with some code to provide a satisfactory level of integration, which I will briefly discuss in Part 2 along with other thoughts about Calico and MetalLB.

Configuration Files (Git)

simonbirtles/aci-calico-metallb: ACI, Calico and MetalLB (github.com)

References

MetalLB, bare metal load-balancer for Kubernetes (universe.tf)

Cluster Networking | Kubernetes

Command_interface_examples · Wiki · labs / BIRD Internet Routing Daemon · GitLab (nic.cz)

projectcalico/bird: Calico’s fork of the BIRD protocol stack (github.com)

BIRD User’s Guide: Filters (network.cz)

kubernetes/dashboard: General-purpose web UI for Kubernetes clusters (github.com)