Cisco ACI Inband Management

I have seen various ACI inband management documents which cover this topic, but I have yet to find one that clearly presents all the required information, including the additional EPGs for access to vCenter, syslog, NTP, etc. If you have read any of my posts you will know I am a fan of configuring the APIC directly via REST for many reasons, and management is no different. I have standard XML that I reuse for every deployment, which I can quickly modify for the specific customer's build and POST to the APIC without concern that I have missed something. The XML in this blog comes from that very collection, albeit with some tweaks.

Configuring the APIC for ACI inband management centres on a specific ‘inband’ EPG which is only available in the ‘mgmt’ tenant. This inband EPG contains endpoints just like any other EPG, but these endpoints are the APICs, leafs and spines only. We configure each device with an IP address in the inband subnet under the ‘mgmt’ tenant ‘static node addresses’ (mgmtRsInBStNode). The inband EPG (mgmtInB) is assigned a VLAN ID which is added to the APIC’s ‘bond0’ (that is bond ‘zero’) interface as a sub-interface. The ‘bond0’ interface is the fabric connected interface(s) and is automatically configured when the APIC is set up; it is configured as a trunk port and will already be carrying the infra VLAN you specified during the first APIC setup in the CLI. Unless you have a good reason not to, this infra VLAN should be the recommended 3967 (the magic VLAN ID). If you open a shell prompt on the APIC and run ‘ifconfig | grep bond0’ you will see a sub-interface for the infra VLAN, bond0.3967. Doing the same after configuring inband management will show an additional sub-interface with the VLAN ID you configured for inband management, i.e. ‘bond0.3900’ if you used VLAN 3900 for the inband VLAN (we will look at this later). All the mgmt ports on the leafs and spines will also be configured internally with this VLAN (note the mgmt port itself is an L3 access port) and will have the IP address assigned as configured in the APIC.

We do need to consider the APIC fabric connections to the leaf switches though. The ports the APICs are connected to on the leaf switches require Interface Policy Groups, VLAN Pools, Physical Domains, an AEP, and Leaf and Interface Selectors. This seems a bit odd at first glance: why would we need to assign an interface policy group to the leaf ports the APIC is connected to? These are already working, and the APIC is already using these ports on the infra VLAN to configure the fabric (i.e. why assign the port speed again?). Obviously the APIC and leaf switch already know the interface speed, CDP and LLDP requirements, etc. The only reason I can think of is that we have to follow the policy model, and the APIC infra policies are hidden behind the scenes as they can’t be changed. So we configure them and apply them to the APIC connected ports, because if we don’t, it won’t work! One point to note: we do not connect this configuration to the inband EPG by creating a ‘Domain Association’ as we would on a normal EPG. It is of course connected to the leaf interfaces via the policy chain from the interface policy group to the interface selector and onwards to the leaf profile.

So we perform the following tasks:

• Configure the leaf ports to which the APIC’s are already connected for the inband VLAN

• Configure the management tenant inband EPG and inband IP addresses for the APIC’s, leafs and spines

• Configure the additional normal EPG to which the vCenter / NTP / SNMP / etc. servers are connected.

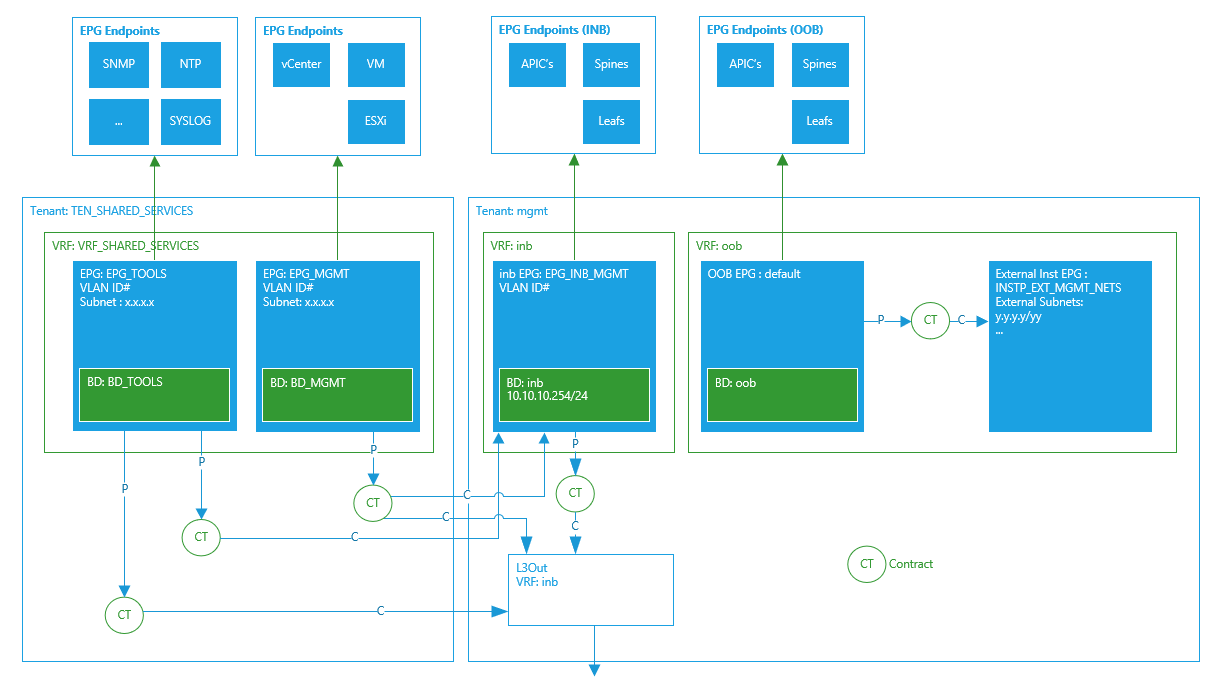

Target State

The logical target state is shown in the following diagram. The inband (inb) EPG uses the ‘inb’ bridge domain, which is part of the ‘inb’ VRF (context). In reality the EPG is not a child of the VRF, nor is the BD a child of the EPG; the diagram represents a pseudo-logical structure of the objects rather than the actual object relationships. The ‘inb’ EPG has endpoints like any EPG, but it is a specific inband EPG and contains only the fabric endpoints (APIC, leaf and spine switches), which are addressed from the subnet configured on the BD. Having just the fabric endpoints, the inband EPG, the BD and subnet, and the APIC fabric leaf ports configured would allow access from any fabric device to another fabric device over the mgmt:inb VRF.

We have an additional requirement for VMM integration. As it is VMware, we need to enable communication between vCenter and the APIC. For this blog we will use a deployment that has vCenter connected to the leaf switches (inband) and not connected outside of the ACI fabric via the OOB network. The choice between the two deployment models is a subject in itself!

As only the fabric endpoints can be in the ‘inb’ EPG, we create another EPG (a normal application one this time) in a separate tenant and separate VRF. This is so we can allow other non-fabric admins access to the new tenant without having to provide admin access to the ‘mgmt’ tenant. This tenant is named ‘TEN_SHARED_SERVICES’ (SS), and the VRF has the same name but with the VRF prefix.

The EPG_MGMT is where we will have the vCenter server connected and in addition all ESXi hosts and VM management interfaces, basically this EPG is the VMware management network. We create a contract in the SS tenant which allows HTTPS access and have the EPG_MGMT provide and export this contract to the ‘mgmt’ tenant. The EPG_INB_MGMT (inband EPG) will consume this contract interface which allows vCenter and the APIC to communicate, the L3Out in mgmt will also consume this contract interface to provide a means for the VMware admins to access vCenter from outside of the fabric via the inband network.

APIC Connected Ports

We first need to create an Interface Policy Group, Physical Domain, AEP, static VLAN Pool, Leaf Selectors and Interface Selectors for the APIC connected ports. We will do this using XML, which is provided below. The following diagram shows the object model for the policy we need. This is no different than usual except that we don’t reference the domain (physical in this case) in the inband EPG configuration. What we see in this diagram is what will be configured by POSTing the XML below.

The XML that follows first creates the physical domain ‘PHYSDOM_INB’ and references a VLAN Pool ‘VLANP_INB’ which we have not created yet. This is legal in ACI. The relationship won’t work yet, of course, as the pool does not exist, and it will show a state of ‘missing-target’. That is fine, as we will create the pool shortly and the state will change to ‘formed’.
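The ‘missing-target’ / ‘formed’ state can also be checked from a script rather than the GUI. The following is only a sketch: it assumes you have already fetched the `infraRsVlanNs` relation objects from the APIC (for example with a class query), and the sample response here is hand-written for illustration, not captured from a real fabric.

```python
import xml.etree.ElementTree as ET

# Hand-written, trimmed-down example of an <imdata> response body.
# A real response carries many more attributes per object.
SAMPLE_RESPONSE = (
    "<imdata>"
    '<infraRsVlanNs state="missing-target" '
    'tDn="uni/infra/vlanns-[VLANP_INB]-static" />'
    "</imdata>"
)


def unresolved_targets(response_xml):
    """Return the tDn of every relation whose state is not 'formed'."""
    root = ET.fromstring(response_xml)
    return [mo.get("tDn") for mo in root if mo.get("state") != "formed"]


pending = unresolved_targets(SAMPLE_RESPONSE)
print(pending)
```

An empty list after the VLAN pool has been POSTed would indicate the physical domain now resolves its pool.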

We next create the interface selectors, which are just the port numbers that the APIC is connected to; here the APIC is connected to port 1/48 on the leaf switches. Following this we assign the interface selector we just created to the leaf node profiles (the leaf switches) using the relationship object ‘infraRsAccPortP’. The leaf profiles should already exist with the correct names; I will have run a POST prior to this configuration to set up all the leaf profiles. If you have not and you POST this XML, it will create the leaf profile but it will be missing the node ID child object. You can add this to the XML if you wish, but it is better to follow a structured method to configure the APIC rather than try to squeeze all the configuration into a few XML POSTs.
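Binding the same interface profile to many leaf profiles is repetitive, so it lends itself to a small generator. This is a minimal sketch, not my production script: it assumes the leaf profiles already exist under the names passed in, and the function name `leaf_bindings` is made up for this example.

```python
import xml.etree.ElementTree as ET


def leaf_bindings(leaf_profiles, port_profile="INTP_APIC"):
    """Build a polUni payload that attaches one interface profile
    to each named leaf profile via infraRsAccPortP."""
    pol_uni = ET.Element("polUni")
    infra = ET.SubElement(pol_uni, "infraInfra")
    for profile in leaf_profiles:
        node_p = ET.SubElement(
            infra, "infraNodeP", dn=f"uni/infra/nprof-{profile}"
        )
        ET.SubElement(
            node_p,
            "infraRsAccPortP",
            tDn=f"uni/infra/accportprof-{port_profile}",
        )
    return pol_uni


payload = leaf_bindings(["LEAF_201", "LEAF_202"])
print(ET.tostring(payload, encoding="unicode"))
```

Feeding it the full list of APIC connected leafs produces the same `infraNodeP` entries that are written out by hand in the XML for this section.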

Next the VLAN Pool which when created will allow the physical domain to reference this valid object and the state of the relationship will be ‘formed’. Then we create the AEP and bind it to the domain as usual.

Finally we create the Interface Policy Group (IPG) for the APIC ports. This configuration relies on the individual interface protocol policies already having been created. The ones listed in this policy were previously created as part of my setup script for the APIC. For each configuration option in the IPG, I ensure there is a named policy that can be selected instead of ‘default’, with the exception of the monitoring policy. When you look at an IPG and see ‘default’, what does default mean? You may know for some or even all of the policy options, but has someone changed the default? It’s just not worth the risk, so I ensure that for every IPG created an explicit option is selected. The defaults are simply recreated as new objects with a meaningful name, alongside the other possible options. It’s all in an XML file that I POST when I configure fabrics, so it takes seconds, and later scripts create IPGs with the correct settings and consistency. Of course, change these to your policy names or use mine, which are on my GitHub.

```xml
<polUni>
  <physDomP name="PHYSDOM_INB">
    <infraRsVlanNs tDn="uni/infra/vlanns-[VLANP_INB]-static" />
  </physDomP>
  <infraInfra>
    <!-- APIC Interface Selectors -->
    <infraAccPortP dn="uni/infra/accportprof-INTP_APIC" name="INTP_APIC">
      <!-- APIC Leaf Interfaces -->
      <infraHPortS name="APIC" type="range">
        <infraRsAccBaseGrp tDn="uni/infra/funcprof/accportgrp-IPG_ACC_INB_APIC" />
        <infraPortBlk fromCard="1" fromPort="48" name="block2" rn="portblk-block2" toCard="1" toPort="48" />
      </infraHPortS>
    </infraAccPortP>
    <!-- Connect the above interfaces to the correct leafs the apics are connected to -->
    <infraNodeP dn="uni/infra/nprof-LEAF_201">
      <infraRsAccPortP tDn="uni/infra/accportprof-INTP_APIC" />
    </infraNodeP>
    <infraNodeP dn="uni/infra/nprof-LEAF_202">
      <infraRsAccPortP tDn="uni/infra/accportprof-INTP_APIC" />
    </infraNodeP>
    <infraNodeP dn="uni/infra/nprof-LEAF_203">
      <infraRsAccPortP tDn="uni/infra/accportprof-INTP_APIC" />
    </infraNodeP>
    <infraNodeP dn="uni/infra/nprof-LEAF_204">
      <infraRsAccPortP tDn="uni/infra/accportprof-INTP_APIC" />
    </infraNodeP>
    <infraNodeP dn="uni/infra/nprof-LEAF_303">
      <infraRsAccPortP tDn="uni/infra/accportprof-INTP_APIC" />
    </infraNodeP>
    <infraNodeP dn="uni/infra/nprof-LEAF_304">
      <infraRsAccPortP tDn="uni/infra/accportprof-INTP_APIC" />
    </infraNodeP>
    <!-- INB VLAN POOL -->
    <fvnsVlanInstP name="VLANP_INB" descr="Inband Management VLAN Pool" allocMode="static">
      <fvnsEncapBlk name="BLK_V3900" descr="" allocMode="inherit" from="vlan-3900" to="vlan-3900" />
    </fvnsVlanInstP>
    <!-- INB AEP -->
    <infraAttEntityP name="AEP_INB" descr="AEP for ACI Inband Management">
      <!-- Attach to Domain (Physical Domain) -->
      <infraRsDomP tDn="uni/phys-PHYSDOM_INB" />
    </infraAttEntityP>
    <!-- Interface Policy Group Access -->
    <infraFuncP>
      <!-- Bare Metal Host Leaf Access Port Policy Group -->
      <infraAccPortGrp name="IPG_ACC_INB_APIC" descr="Interface Policy Access Group - Inband Management Ports">
        <infraRsHIfPol tnFabricHIfPolName="LINK_10G" />
        <infraRsCdpIfPol tnCdpIfPolName="CDP_ON" />
        <infraRsMcpIfPol tnMcpIfPolName="MCP_ON" />
        <infraRsLldpIfPol tnLldpIfPolName="LLDP_OFF" />
        <infraRsStpIfPol tnStpIfPolName="STP_BPDU_GUARD_FILTER_ON" />
        <infraRsStormctrlIfPol tnStormctrlIfPolName="STORMCONTROL_ALL_TYPES" />
        <infraRsL2IfPol tnL2IfPolName="L2_VLAN_SCOPE_GLOBAL" />
        <infraRsL2PortSecurityPol tnL2PortSecurityPolName="PORT_SECURITY_DISABLED" />
        <!-- Data Plane Policy -->
        <infraRsQosDppIfPol tnQosDppPolName="DPP_NONE" />
        <infraRsQosEgressDppIfPol tnQosDppPolName="DPP_NONE" />
        <infraRsQosIngressDppIfPol tnQosDppPolName="DPP_NONE" />
        <!-- Monitoring Policy -->
        <infraRsMonIfInfraPol tnMonInfraPolName="" />
        <!-- Fibre Channel - FCOE -->
        <infraRsFcIfPol tnFcIfPolName="FC_F_PORT" />
        <infraRsQosPfcIfPol tnQosPfcIfPolName="PFC_AUTO" />
        <infraRsQosSdIfPol tnQosSdIfPolName="SLOW_DRAIN_OFF_DISABLED" />
        <infraRsL2PortAuthPol tnL2PortAuthPolName="8021X_DISABLED" />
        <!-- 3.1 add firewall & copp policy -->
        <!-- AEP -->
        <infraRsAttEntP tDn="uni/infra/attentp-AEP_INB" />
      </infraAccPortGrp>
      <!-- end Bare Metal Host Leaf Access Port Policy Group -->
    </infraFuncP>
  </infraInfra>
</polUni>
```
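A payload like the one above is pushed by logging in to the APIC and then POSTing the XML to the policy universe. The sketch below only builds the requests using the Python standard library; the host name and credentials are placeholders, the helper names are my own for this example, and certificate handling (many APICs run self-signed certificates) is left to the caller through the optional `context` argument.

```python
import urllib.request
import xml.etree.ElementTree as ET
from xml.sax.saxutils import quoteattr


def login_request(apic, user, password):
    """Build (but do not send) the aaaLogin request."""
    body = f"<aaaUser name={quoteattr(user)} pwd={quoteattr(password)} />"
    return urllib.request.Request(
        f"https://{apic}/api/aaaLogin.xml", data=body.encode(), method="POST"
    )


def token_from_login(response_xml):
    """Extract the session token from an aaaLogin response body."""
    return ET.fromstring(response_xml).find("aaaLogin").get("token")


def post_request(apic, xml_payload, token):
    """Build the POST of a polUni payload, authenticated by cookie."""
    req = urllib.request.Request(
        f"https://{apic}/api/mo/uni.xml",
        data=xml_payload.encode(),
        method="POST",
    )
    req.add_header("Cookie", f"APIC-cookie={token}")
    return req


def send(request, context=None):
    """Send a prepared request and return the response body as text."""
    with urllib.request.urlopen(request, context=context) as resp:
        return resp.read().decode()


# Nothing is sent here; these lines only show how the pieces fit together.
login = login_request("apic.example.com", "admin", "secret")
push = post_request("apic.example.com", "<polUni />", "TOKEN")
```

In use, the token would come from `token_from_login(send(login))` and the payload would be the XML file read from disk.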

Management Tenant In-band EPG Configuration

The inband mgmt EPG (mgmtInB) is a special EPG which contains only the ACI fabric devices (APICs and switches). Like any other EPG, it is isolated when first created until contracts are applied, so in that respect it acts like a normal EPG. We assign a bridge domain and create a contract along with the creation of the EPG, and we also assign IP addresses to the fabric devices. The XML for this configuration follows the discussion of the configuration steps.

In the XML below, we first create a contract. This contract will be provided by the inb mgmt EPG and consumed by an L3Out created in the mgmt tenant specifically for access to the inb network from outside of the fabric. The contract I have given here uses the common default filter; in practice this would usually be restricted to HTTPS, SSH and SNMP for access to the APIC and switches. If you are providing services from outside the fabric for consumption by the fabric devices via the inband network (NTP, for example), a contract should be provided by the L3Out and consumed by the inb EPG to allow this communication.
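As an illustration of tightening the contract beyond the common default filter, the sketch below generates a filter with entries for HTTPS, SSH and SNMP and attaches it to the contract subject. The filter name `FLT_INB_MGMT` and the entry names are hypothetical, and the port list is an assumption based on the protocols mentioned above; adjust both to your own requirements.

```python
import xml.etree.ElementTree as ET

# (entry name, IP protocol, destination port): an assumed starting set.
MGMT_PORTS = [("HTTPS", "tcp", 443), ("SSH", "tcp", 22), ("SNMP", "udp", 161)]


def inband_contract(filter_name="FLT_INB_MGMT"):
    """Build the mgmt tenant payload with an explicit filter and
    the CNT_INB_MGMT contract that references it."""
    pol_uni = ET.Element("polUni")
    tenant = ET.SubElement(pol_uni, "fvTenant", name="mgmt")
    flt = ET.SubElement(tenant, "vzFilter", name=filter_name)
    for entry_name, proto, port in MGMT_PORTS:
        ET.SubElement(
            flt,
            "vzEntry",
            name=entry_name,
            etherT="ip",
            prot=proto,
            dFromPort=str(port),
            dToPort=str(port),
        )
    contract = ET.SubElement(tenant, "vzBrCP", name="CNT_INB_MGMT", scope="context")
    subject = ET.SubElement(contract, "vzSubj", name="SUBJ_INB_MGMT")
    ET.SubElement(subject, "vzRsSubjFiltAtt", tnVzFilterName=filter_name)
    return pol_uni


contract_payload = inband_contract()
print(ET.tostring(contract_payload, encoding="unicode"))
```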

Note: The inb EPG cannot operate as a provider of a shared service by using a shared L3Out in another tenant. For that configuration the subnet options would need to allow the subnet to be advertised externally and shared between VRFs, and for a provider of a shared service the subnet should be defined under the EPG and not the bridge domain. You can configure a subnet in the inb EPG, but even if you change the subnet options (via REST, as you cannot change these options via the GUI) the subnet will not be advertised out of the L3Out. You can, however, share an existing physical gateway and dot1q tag the inb L3Out and any other L3Outs in different tenants/VRFs you want to.

The bridge domain used is the built-in ‘inb’ bridge domain, and we assign an IP network to this BD that encompasses the IP addresses we will assign to the fabric devices. Nothing else is required here. You should assign in-band addresses to all nodes as well as the APICs. If you have an APIC with in-band addressing assigned and the leaf it is connected to does not have an in-band address, the inb EPG will not be deployed to that leaf and in-band for the APIC will not work. The inb EPG is indeed special in a number of ways, and this is another one of them.

The mgmt EPG is created in the management profile (mgmtMgmtP) of the mgmt tenant, the EPG (mgmtInB) is assigned the ‘inb’ BD, a VLAN ID as discussed earlier and also provides the contract we created called ‘CNT_INB_MGMT’. We now need to assign IP addresses to the fabric devices on the inband network. There are two methods to achieve this depending on the size of your network and the ease of administration you prefer.

1. Manually assign the IP addresses to the nodes as shown below. This works on a small network, and it still works on a large network, especially if you script the installation to produce the XML for the inb IP addressing.

2. Assign an IP address pool for the purposes of auto assignment to new devices that appear on the fabric. This is not DHCP; it is a method to permanently assign a new IP address to a new fabric device without manual intervention, with the IP address taken dynamically from the IP pool you provide.

The big difference between the two methods is that the static method (#1) only assigns what you provide. This is fine when you first build the fabric and know what switches (or APICs) will be added, but when you add new devices you have to add their addresses manually. You need to add the new devices to the fabric anyway (fabricNodeIdentP), so if you have a script to create these new devices, enhance it slightly to add the inb and oob addressing. I use a script that produces and posts all the XML configuration required to add one or more nodes for fabric membership (fabricNodeIdentP), inb mgmt and oob mgmt, which takes care of all the tasks required to add a node to the fabric. I am using method #1 here for the very reasons I have just described. I will add that you should watch how you assign the last octet to your nodes. Assigning the last octet to match the node number makes sense at first glance, as it is easy to remember, but think about scaling. If you start your leafs at 200, that gives you a limit of 254, so when you add node 255 what do you assign that node? The convention then loses any relevance and gets confusing, as some addresses match the node ID and some don’t. In my view it is better to start at low numbers to give you room to grow in your assigned subnet; when you scale, the assignment methodology won’t change and won’t cause confusion or frustration.

```xml
<polUni>
  <fvTenant name="mgmt">
    <!-- Create the Contract for inb management from outside the fabric -->
    <vzBrCP name="CNT_INB_MGMT" scope="context">
      <vzSubj name="SUBJ_INB_MGMT">
        <!-- common/default filter, update to your requirements, the common/default is fully open ! -->
        <vzRsSubjFiltAtt directives="" tnVzFilterName="default" />
      </vzSubj>
    </vzBrCP>
    <!-- Assign IP Subnet to BD - inb -->
    <fvBD name="inb">
      <fvSubnet ip="10.10.10.254/24" />
    </fvBD>
    <mgmtMgmtP name="default">
      <!-- Create the Inband mgmt EPG -->
      <mgmtInB encap="vlan-3900" name="EPG_INB_MGMT">
        <!-- Assign mgmt BD - inb -->
        <mgmtRsMgmtBD tnFvBDName="inb" />
        <!-- This Inband mgmt EPG is a provider of the contract -->
        <fvRsProv tnVzBrCPName="CNT_INB_MGMT" />
        <!-- APIC INB Addresses -->
        <!-- APIC Nodes -->
        <mgmtRsInBStNode addr="10.10.10.1/24" gw="10.10.10.254" tDn="topology/pod-1/node-1" />
        <mgmtRsInBStNode addr="10.10.10.2/24" gw="10.10.10.254" tDn="topology/pod-1/node-2" />
        <mgmtRsInBStNode addr="10.10.10.3/24" gw="10.10.10.254" tDn="topology/pod-1/node-3" />
        <!-- repeat as required...... -->
        <!-- Spine Nodes -->
        <mgmtRsInBStNode addr="10.10.10.11/24" gw="10.10.10.254" tDn="topology/pod-1/node-101" />
        <mgmtRsInBStNode addr="10.10.10.12/24" gw="10.10.10.254" tDn="topology/pod-1/node-102" />
        <mgmtRsInBStNode addr="10.10.10.13/24" gw="10.10.10.254" tDn="topology/pod-1/node-103" />
        <mgmtRsInBStNode addr="10.10.10.14/24" gw="10.10.10.254" tDn="topology/pod-1/node-104" />
        <!-- repeat as required...... -->
        <!-- Leaf Nodes -->
        <mgmtRsInBStNode addr="10.10.10.21/24" gw="10.10.10.254" tDn="topology/pod-1/node-201" />
        <mgmtRsInBStNode addr="10.10.10.22/24" gw="10.10.10.254" tDn="topology/pod-1/node-202" />
        <mgmtRsInBStNode addr="10.10.10.23/24" gw="10.10.10.254" tDn="topology/pod-1/node-203" />
        <!-- repeat as required...... -->
      </mgmtInB>
    </mgmtMgmtP>
  </fvTenant>
</polUni>
```
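The static node addresses above follow a simple pattern: each group of nodes gets consecutive low host numbers rather than a last octet that mirrors the node ID. A minimal sketch of generating them is shown here; it is not the script I use, the function name `inband_nodes` and the `first_host` parameter are invented for this example, and it assumes everything lives in pod 1 as in the XML above.

```python
import ipaddress
import xml.etree.ElementTree as ET


def inband_nodes(subnet, gateway, node_ids, first_host):
    """Return mgmtRsInBStNode elements with consecutive addresses,
    starting at host number first_host within the subnet."""
    net = ipaddress.ip_network(subnet)
    gw = ipaddress.ip_address(gateway)
    elements = []
    for offset, node_id in enumerate(node_ids):
        addr = net.network_address + first_host + offset
        # Refuse addresses outside the subnet or clashing with the gateway.
        if addr not in net or addr in (gw, net.broadcast_address):
            raise ValueError(f"no usable address for node {node_id}")
        elements.append(
            ET.Element(
                "mgmtRsInBStNode",
                addr=f"{addr}/{net.prefixlen}",
                gw=str(gw),
                tDn=f"topology/pod-1/node-{node_id}",
            )
        )
    return elements


leafs = inband_nodes("10.10.10.0/24", "10.10.10.254", [201, 202, 203], first_host=21)
for element in leafs:
    print(ET.tostring(element, encoding="unicode"))
```

Because the host number is decoupled from the node ID, adding node 255 or higher needs no change to the scheme, only a free address in the subnet.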

Shared Services

Depending on the customer requirements I may opt to create a tenant called “TEN_SHARED_SERVICES” and provide access to various infrastructure tools or services through this tenant. Why do this when the common tenant is meant to provide this? The common tenant allows all other tenants to consume services from it, with less control over what a tenant is allowed to consume. This doesn’t really matter if you have a single small administration team that understands what tenants should and should not consume. It becomes a problem in highly secure environments and when administrative control is split between different teams, which is somewhat what we are trying to achieve (let the app guys configure the network from an app point of view, hence ‘ACI’). I am not saying never use the common tenant. I use this shared services tenant because I have designed and built highly secure environments with ACI and require the security of explicitly exporting a contract to a tenant to allow consumption of that contract.

In this tenant I create an EPG for the VMware vCenter management network consisting of the ESXi hosts and vCenter. This EPG provides the ability for vCenter to communicate with the ESXi hosts and will also provide a contract to allow inbound connections from outside the fabric to connect to vCenter via HTTPS. This is inband management for VMware. I will assume the Tenant, VRF, BD and EPG are already created, as these are common tasks and hopefully you are familiar with creating them.

vCenter In-band Management Issues

vCenter integration with the APIC allows the APIC to query vCenter when a new VM is booted to understand which EPG the VM traffic should be a member of. This process must happen before the VM is allowed access to the network, as it must be part of an EPG. You can statically assign ports, and therefore VLAN IDs, to an EPG so that when a VM boots and traffic is received by the port with a dot1q tag, the APIC and leaf switch know which EPG to assign the traffic to. This of course does not scale to thousands of VMs. The issue with dynamic assignment is that vCenter is now typically a virtual appliance, i.e. a virtual machine on an ESXi host. When the vCenter VM boots, the APIC needs to contact vCenter in order to ascertain which EPG the VM should be a member of, and this can’t happen because it is vCenter we are trying to boot up!

We can solve this a few ways when vCenter is connected in-band of the ACI fabric.

- Agree with the VMware admins that vCenter will only be hosted on a few select ESXi hosts, and statically configure the ESXi ports and VLAN ID in the VMware management EPG for each ESXi host that vCenter will reside on. We then allow the APIC and vCenter to resolve the EPGs for all other ESXi hosts dynamically.

- When configuring the VMM domain in the VMware management EPG, use the ‘pre-provision’ option with the VLAN ID to ensure all ports associated with this VMM domain have the EPG and VLAN ID configured on them before any device is connected to these ports. This makes the VMware management EPG available to any device connected to these ports. This can be a security issue for some companies, so make yourself aware of the implications.

- Use a vSwitch instead of a DVS for management only. This requires all ESXi host management interfaces to be on different physical interfaces from the DVS connected interfaces. An APIC physical domain is required instead of a VMM domain, as we are configuring things statically for management. You still need a VMM domain applied to other EPGs for normal traffic, otherwise you have no integration.

We will implement option 2, ‘pre-provisioning’, for all ports in the VMM domain. The snippet below shows the important components of the EPG VMM domain association, in particular the attributes [instrImedcy, resImedcy]. The ‘resImedcy’ attribute MUST be set to ‘pre-provision’, which ensures the switch has the config. The ‘instrImedcy’ attribute helps speed things up by ensuring the port has the config pre-programmed. We also need to statically assign the VLAN ID, so set ‘classPref’ to “encap” and ‘encap’ to the VLAN ID for management. We of course have the ‘tDn’ referencing the VMM domain for this EPG. The VMM domain is connected to an AEP which is connected to the assigned leafs and ports via the Interface Policy Group.

```xml
<fvRsDomAtt
  instrImedcy="immediate"
  resImedcy="pre-provision"
  classPref="encap"
  encap="vlan-3900"
  encapMode="auto"
  mode="default"
  tDn="uni/vmmp-VMware/dom-VC_SDOM12"
/>
```
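To show where this association sits in the object tree, the sketch below wraps it in the tenant, application profile and EPG. The tenant and EPG names come from this post, but the application profile name `APP_MGMT` is a placeholder I have made up, so substitute your own; only the attributes discussed above are set, leaving everything else at its default.

```python
import xml.etree.ElementTree as ET


def preprovisioned_vmm_epg(vmm_domain_dn, vlan_id, app_profile="APP_MGMT"):
    """Build the EPG_MGMT payload with a pre-provisioned,
    statically encapsulated VMM domain association."""
    pol_uni = ET.Element("polUni")
    tenant = ET.SubElement(pol_uni, "fvTenant", name="TEN_SHARED_SERVICES")
    app = ET.SubElement(tenant, "fvAp", name=app_profile)
    epg = ET.SubElement(app, "fvAEPg", name="EPG_MGMT")
    ET.SubElement(
        epg,
        "fvRsDomAtt",
        instrImedcy="immediate",    # program the ports straight away
        resImedcy="pre-provision",  # push policy before any host attaches
        classPref="encap",
        encap=f"vlan-{vlan_id}",    # static VLAN for the management EPG
        tDn=vmm_domain_dn,
    )
    return pol_uni


epg_payload = preprovisioned_vmm_epg("uni/vmmp-VMware/dom-VC_SDOM12", 3900)
print(ET.tostring(epg_payload, encoding="unicode"))
```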

Hi Simon, great article !! Loved it !! I do have a question about inband/oob traffic flow for APICs

So in your article, you described how APIC can communicate with vCenter through inband with the following traffic flow:

apic -> leaf -> spine -> another leaf -> vCenter

What about OOB traffic flow? If I have NTP/DNS/SNMP on the OOB network and need to reach them via my APIC OOB network, how do I do that? My initial thought is to change the default route on the APIC to use the OOB gateway instead of the INB gateway (this is done by changing the APIC connectivity preference setting in the global settings).

The above would break my inband connections. Is there a way for me to specifically tell APIC that “if you need to reach the vCenter IP/Network, use your inband interface” ?

Thanks in advance

Peter

PS: Through my testing, I noticed that if I use OOB as my APIC default gateway, my inband still works as long as I specify the source interface when I initiate the traffic on the APIC.

If I have a server inside ACI pinging the APIC inband, it will work without problems, which tells me that the APIC is already configured with a mechanism to “send return traffic the same interface that it was received on”

Simon,

I’m having some problems figuring out how solution 1 and 2 for the inband vCenter management issue should work.

As far as I know the APIC creates a VDS on the vCenter Server on which it creates a port-group per EPG associated with the VMM domain of the vCenter Server. This would mean that at startup of the vCenter Server, this VDS would not yet exist.

Both options seem to require at least two NIC’s connected to the fabric, one for the management EPG and one for the uplink of the VDS created by the APIC. Unless the vCenter Server is moved to the VDS created by the APIC after connecting the management EPG through a non-APIC VDS or vSwitch. This could potentially lead to loss of connection with the vCenter Server.

It’s somewhat of a chicken and egg problem. I don’t really see how pre-provisioning would solve this problem, as the vSwitch needed to connect the vCenter Server to the fabric would still occupy the uplink needed by the VDS created by the APIC.

Is there something that I’m missing?

Sorry if this question may seem a bit strange, I’m pretty new to Cisco ACI.

I’m looking forward to your answer.

Kind regards,

Kevin

Hi Kevin,

From what you say, it seems that you have ESXi servers with one interface or a single interface bundle. With a single interface configuration for VM MGMT, PROD VLANs and vMotion it will be a challenge; the approach generally recommended by VMware is to have separate physical interfaces (or bundled / teamed interfaces) for each of these functions. Where you only have a single interface carrying all these functions you will have this issue, as you state. Moving vCenter from the vSwitch to the DVS will cause a disconnect as you say, but in terms of ACI integration this will not be an issue for a short period, as long as there are no DRS moves in progress, no VMs booting, and no creation or modification of EPGs/BDs or any other related VM configuration on ACI until vCenter is back up on the correct switch and VLAN.

The approach I usually use is a dedicated interface pair (team/bundle) for VM MGMT on a vSwitch with static port configuration in the VM MGMT EPG. This is not an additional pain, as we configure the ESXi host’s physical ports when we connect the host and just additionally add the same ports to the VM MGMT EPG (usually all done with scripts anyway). This keeps management access stable and outside of any dependency on the ACI to vCenter configuration. The PROD and vMotion teamed/bundled ports are then dynamic, in that they are connected to the ACI integrated DVS. Sometimes vMotion can be statically configured too, as it is the same as VM MGMT in that it is one single static configuration per ESXi host. The PROD VLANs / PGs are the important ones to keep dynamic, so that port/policy configuration is deployed only where it is needed, reducing resource usage on the leaf switches.

I don’t think you’re missing anything, but it sounds like the setup you refer to isn’t optimal in terms of the recommended setup. That said, we don’t always have that luxury; sometimes we are restricted in terms of available resources (ESXi host interfaces in your case) and have to find workarounds.

Hope that helps !

Simon